Neither an encyclopaedia nor Google is able to answer a question as seemingly simple as this one: Who invented the first computer? If we dig deeper, we soon find many different answers, and most of them are correct. Searching for an answer invites us to review the history of computing, to meet its pioneers and to discover that it is still not entirely clear what a computer is.

Charles Babbage and the mechanical computer

Before Babbage, computers were humans. This was the name given to people who specialised in numerical calculation: they spent long hours performing arithmetic operations, repeating the same processes over and over again and recording the results in tables, which were compiled into valuable books. These tables made life much easier for other specialists who used the results for all sorts of tasks, from the artillery officers who decided how to aim the cannons, to the tax collectors who calculated taxes, to the scientists who predicted the tides or the movement of the stars in the heavens.

At the end of the 18th century, the French revolutionary government commissioned Gaspard de Prony (22 July 1755 – 29 July 1839) with the revolutionary task of producing the most precise logarithmic and trigonometric tables ever made (with between 14 and 29 decimal places), in order to refine and simplify the astronomical calculations of the Paris Observatory and to unify the measurements used by the French administration. For this colossal task, de Prony had the brilliant idea of dividing the most complex calculations into simpler mathematical operations that could be performed by less qualified human computers. This way of speeding up the work and avoiding errors was one of the things that inspired the English polymath Charles Babbage (26 December 1791 – 18 October 1871) to take the next step: replacing human computers with machines.

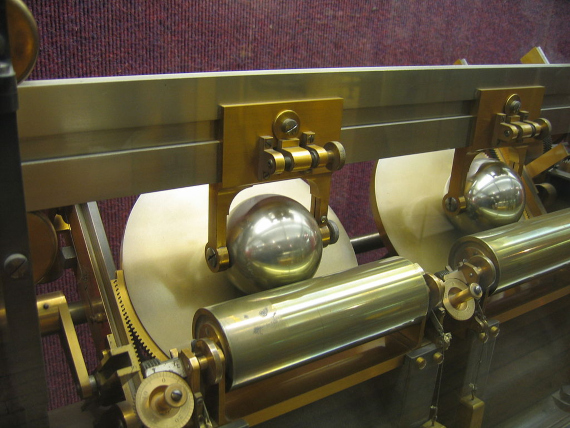

Babbage is considered by many to be the father of computing because of that vision, even though he never managed to realise it himself. His first attempt was the Difference Engine, which he began working on in 1822. Based on the method of finite differences, it was meant to perform complex mathematical calculations through a simple series of additions and subtractions, avoiding multiplication and division. He even built a small working model that proved his method worked, but he never completed a Difference Engine capable of filling those coveted logarithmic and trigonometric tables with accurate data. Lady Byron, Ada Lovelace's mother, claimed to have seen a working prototype in 1833, albeit one limited in both complexity and precision, but by then Babbage had already exhausted the funding provided by the British government.
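The method of finite differences that the Difference Engine was meant to mechanise can be illustrated with a short modern sketch. It is not a model of Babbage's hardware: it assumes the function to be tabulated is a polynomial, evaluates it only to obtain a starting value and its differences, and then produces every further table entry by addition alone. The function names and the sample polynomial x² + x + 41 are illustrative choices, not taken from Babbage's designs.

```python
def initial_differences(poly, degree):
    """Return [f(0), Δf(0), Δ²f(0), ...] up to the given degree.

    This is the only step that evaluates the polynomial directly;
    everything after it uses addition alone.
    """
    row = [poly(x) for x in range(degree + 1)]
    diffs = []
    while row:
        diffs.append(row[0])
        # Next row: differences between neighbouring entries
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(diffs, count):
    """Produce `count` successive table entries using only additions."""
    registers = list(diffs)  # one register per order of difference
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Add each difference into the register above it. Going from low
        # to high index means each addition uses the not-yet-updated value.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


def sample_polynomial(x):
    # Hypothetical example function, chosen only for illustration
    return x * x + x + 41


if __name__ == "__main__":
    table = tabulate(initial_differences(sample_polynomial, 2), 10)
    print(table)  # [41, 43, 47, 53, 61, 71, 83, 97, 113, 131]
```

Each pass of the outer loop roughly corresponds to one cycle of such an engine: every column of differences is added into the column above it. For a polynomial of degree n the n-th difference is constant, so n + 1 registers are enough.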

Far from being discouraged by the failure of the Difference Engine, Babbage, a mathematician, philosopher, engineer and inventor, doubled down. He concentrated all his energies on developing the Analytical Engine, a far more ambitious machine that could carry out more complex calculations, including multiplication and division. Once again, Babbage never got past the design stage, but the designs he began in 1837 made him, if not the father of computing, then certainly a prophet of what was to come.

Babbage's thousands of pages of notes and sketches on the Analytical Engine describe components and processes common to any modern computer: an arithmetic unit, which he called the mill (the equivalent of a processor or CPU); a control structure with instructions, loops and conditional branching (like a programming language); a store for holding numbers (an early form of memory); and punched cards for feeding in instructions and data, an idea he borrowed from the Jacquard loom. Babbage even planned to record the results of the calculations on paper, using an output device that was a precursor of today's printers.

The Thomson brothers and analogue computers

In 1872, one year after Charles Babbage died, the great physicist William Thomson (Lord Kelvin) invented a machine that could perform complex calculations to predict the tides at a given place. It is considered the first analogue computer, an honour it shares with the differential analyser based on the wheel-and-disc integrator that his brother James Thomson devised in 1876. This was a more advanced and general device, designed to solve differential equations by integration using wheel-and-disc mechanisms.

However, it was not until well into the 20th century that H.L. Hazen and Vannevar Bush perfected the mechanical analogue computer at MIT (Massachusetts Institute of Technology). Between 1928 and 1931, they built a differential analyser that was truly practical because it could be set up to solve different problems; by that criterion, it could be considered the first computer.

Turing and the universal computing machine

By this point, analogue machines could already replace human computers in some tasks and were calculating ever faster, especially once their gears began to be replaced by electronic components. But they still had one serious drawback: each was designed to perform one type of calculation, and to use it for another, its gears or circuits had to be replaced.

That remained the case until 1936, when a young English mathematician, Alan Turing, conceived of a machine that could solve any problem that could be expressed in mathematical terms and reduced to a chain of logical operations on binary numbers, in which only two values were possible: true or false. The idea was to reduce everything (numbers, letters, pictures, sounds) to strings of ones and zeros and to use a recipe (a program) to solve problems in very simple steps. The digital computer was born, but for now it was only an imaginary machine.
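Turing's imaginary machine is simple enough to simulate in a few lines. The sketch below is a modern simplification, not Turing's 1936 formalism: a tape of symbols, a head that reads and writes one cell at a time, and a table of rules that acts as the program. The rule table `INCREMENT`, which adds one to a binary number, and the function name `run` are hypothetical examples chosen for illustration.

```python
from collections import defaultdict

BLANK = " "

# Hypothetical example program: add 1 to a binary number.
# Each rule maps (state, symbol read) -> (next state, symbol to write, move),
# where move is -1 for one cell left and +1 for one cell right.
INCREMENT = {
    ("start", "0"): ("start", "0", +1),      # scan right to the end of the number
    ("start", "1"): ("start", "1", +1),
    ("start", BLANK): ("carry", BLANK, -1),  # past the last digit: turn back
    ("carry", "1"): ("carry", "0", -1),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("halt", "1", -1),       # 0 + carry = 1, done
    ("carry", BLANK): ("halt", "1", -1),     # ran off the left edge: new leading 1
}


def run(rules, tape_text, state="start", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the final tape contents."""
    # Unvisited cells read as blank, so the tape is unbounded in both directions.
    tape = defaultdict(lambda: BLANK, enumerate(tape_text))
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        state, write, move = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read back every cell from the leftmost to the rightmost one touched
    cells = [tape[i] for i in range(min(tape, default=0), max(tape, default=-1) + 1)]
    return "".join(cells).strip(BLANK)


if __name__ == "__main__":
    print(run(INCREMENT, "1011"))  # prints 1100 (eleven plus one is twelve)
```

The function `run` itself never changes: giving it a different rule table makes it compute something else, loosely mirroring the idea of a single machine that can carry out any program.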

Babbage's Analytical Engine would probably have met the conditions for being a universal Turing machine almost a century earlier, if it had ever been built. At the end of the Second World War, during which he had helped to break the Enigma cipher used in Nazi communications, Turing designed one of the first computers resembling modern ones, the Automatic Computing Engine. Besides being digital, it was programmable; in other words, it could be used for many purposes simply by changing the program.

Zuse and the digital computer

Although Turing established in theory what a computer should look like, he was not the first to put it into practice. That honour goes to an engineer who was slow to gain recognition, partly because his work was financed by the Nazi regime in the midst of a world war. On 12 May 1941, in Berlin, Konrad Zuse completed the Z3, the first fully functional (programmable and automatic) digital computer. Just as the Silicon Valley pioneers would later do, Zuse built the Z3 in his home workshop, using telephone relays instead of electronic components. The first digital computer was therefore electromechanical. It was never turned into an electronic version because the German government refused to finance one, as the project was not considered “strategically important” in wartime.

On the other side of the war, the Allied powers did attach importance to building electronic computers based on vacuum tubes. The first was the ABC (Atanasoff-Berry Computer), completed in 1942 in the United States by John Vincent Atanasoff and Clifford E. Berry, which, however, was neither programmable nor “Turing-complete”. Meanwhile, in Great Britain, two of Alan Turing's colleagues, Tommy Flowers and Max Newman, who also worked at Bletchley Park deciphering Nazi codes, created Colossus, the first electronic, digital and programmable computer. But Colossus, like the ABC, lacked one final feature: it was not “Turing-complete”.

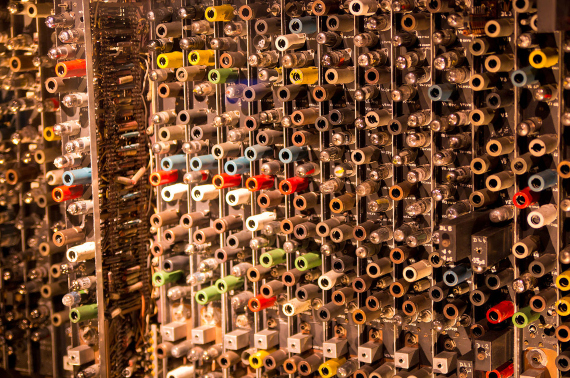

The first computer to combine the four basic features of today's computers, being electronic, digital, programmable and Turing-complete, was ENIAC (Electronic Numerical Integrator and Computer), developed in secret for the US Army and first put to work at the University of Pennsylvania on 10 December 1945 to study the feasibility of the hydrogen bomb. To perform other calculations, its “program” had to be changed; that is, a multitude of cables and switches had to be repositioned by hand. ENIAC, designed by John Mauchly and J. Presper Eckert, occupied 167 m², weighed 30 tons, consumed 150 kilowatts of electricity and contained nearly 18,000 vacuum tubes.

ENIAC was soon surpassed by other computers that stored their programs in electronic memory. Vacuum tubes were replaced first by transistors and eventually by microchips, which set off the race to miniaturise the computer. But that giant machine, built by the great victor of the Second World War, launched our digital age. Today it would be unanimously considered the first true computer in history, were it not for Konrad Zuse (1910–1995), who in 1961 decided to reconstruct his Z3, which had been destroyed in a bombing raid in 1943. The replica was exhibited at the Deutsches Museum in Munich, where it remains today. Decades later, in 1998, the Mexican computer scientist Raúl Rojas studied the Z3 in depth and proved that it could be “Turing-complete”, something its creator, who had died three years earlier, had never considered.

Focused on making it work, Zuse never realised that he had in his hands the first universal computing machine. In fact, he never used his invention that way. So, is Charles Babbage, Konrad Zuse or Alan Turing the inventor of the computer? Was the Z3, Colossus or ENIAC the first modern computer? It depends. The question remains as open today as this one: What makes a machine a computer?
