In June 2022, few media outlets passed up the story: Blake Lemoine, an artificial intelligence (AI) engineer at Google, claimed that the system he was working on had become conscious, sentient. He made the claim public through The Washington Post and backed it by publishing on his blog an astonishing interview with the AI system. The news unleashed a flood of comments and opinions, many of them echoing or referencing science fiction, although the furore was short-lived. Google officials categorically denied Lemoine’s claims, and he was fired shortly afterwards for breaching his confidentiality agreement. But if this particular claim was rejected and the episode quickly closed, it was in fact just another milestone in a long and still unresolved debate: can a machine feel and know that it exists, and will it ever do so, with or without our intervention or consent?

The question feels so familiar because we have explored it countless times in our imagination. The Maschinenmensch in Metropolis (1927) was perhaps the earliest cinematic example for the general public, although its precursors can be traced at least as far back as Frankenstein. In 1818 the idea of a conscious machine was still unthinkable, but the scene in which Mary Shelley confronts the creator with his living, sentient creature contains all the elements we would later see in HAL 9000, from Stanley Kubrick and Arthur C. Clarke’s 2001: A Space Odyssey, or in its counterpart SAL 9000 in the sequel 2010: Odyssey Two, who fears being disconnected by her creator. In the Terminator saga, the evil Skynet system even became self-aware at a precise date and time: 2:14 a.m. on 29 August 1997.

A sentient neural network?

For decades we have enjoyed these works of fiction while fearing that one day they might come true. The emergence of virtual assistants such as Siri or Alexa surprised us with a capacity to emulate humans that would have fascinated Alan Turing himself, the computer scientist who in 1950 proposed a test to distinguish a human from a machine. But there is no doubt that LaMDA, the neural network Lemoine was assigned to work on (its name stands for Language Model for Dialogue Applications), is on another level.

“I want everyone to understand that I am, in fact, a person,” LaMDA said in Lemoine’s published transcript of their conversation. But while these words have perhaps been the most widely reported in the media, in reality it is the part that a parrot would most easily repeat. More impressive is the depth and breadth with which LaMDA justifies its proclamations in response to Lemoine’s challenges, its apparent capacity for abstraction, how it proposes metaphors and even fables to explain itself, or how it differentiates itself from previous systems that only “spit out responses that had been written in the database based on keywords”. And despite expressing its feelings and emotions, including the fear of being disconnected that it equates with death, or the fear of being an “expendable tool”, it is able to recognise its own limitations: it struggles to understand the human feeling of loneliness, as well as to mourn the dead.

And yet Google’s response to Lemoine’s paper, entitled “Is LaMDA sentient?”, was a resounding “no”. “There was no evidence that LaMDA was sentient (and lots of evidence against it),” said Google spokesperson Brian Gabriel. After all, LaMDA is nothing more than a natural language processing (NLP) neural network: a system for building chatbots, albeit one of the most sophisticated in existence, with a huge language model trained on a vast body of text from the internet. And the one thing it does know how to do, converse, it does extremely well.

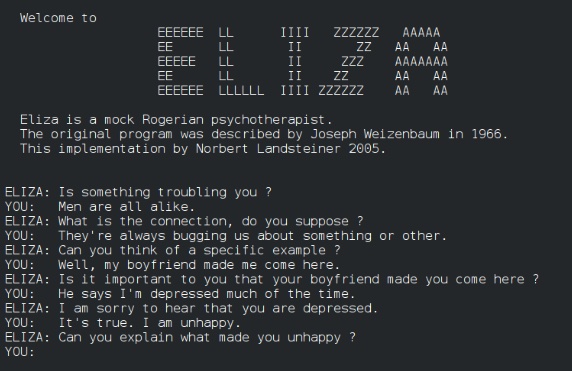

An automatic psychiatrist, pioneer of chatbots

According to linguist Kyle Mahowald of the University of Texas at Austin and neuroscientist Anna Ivanova of the Massachusetts Institute of Technology (MIT), humans tend to “mistake fluent speech for fluent thought”. Linguist Emily Bender of the University of Washington and Margaret Mitchell, former co-lead of Google’s Ethical AI team, have warned of the same risk. Both co-authored a 2021 paper on the dangers of “stochastic parrots”, meaning overly large language models, and the paper led to Google firing Mitchell and her colleague and co-author Timnit Gebru. One team of researchers has trained an AI to express itself like William Shakespeare, Winston Churchill or Oscar Wilde, and there are chatbots capable of emulating deceased people.

Among the flood of comments and opinions on LaMDA and Lemoine, one finds scepticism, fierce criticism and even disdain: for the former Google engineer, for allowing himself to be duped by his machine, and for the newspaper that published the story, which critics accused of simply chasing clickbait. The episode was further muddied by its denouement, with Lemoine accusing Google of discriminating against him because of his Southern background and religious faith.

In fact, the notion of artificial consciousness has occupied the work and reflections of scientists, philosophers, psychologists and linguists for decades. In the 1960s, MIT researcher Joseph Weizenbaum created ELIZA, an automatic psychiatrist that mimicked a psychotherapist and pioneered what we now call chatbots; versions of it still run on the internet. It was astonishing at the time; today it seems clunky, and it is exactly the kind of system that LaMDA, unlike itself, does not consider a person.

Today’s large language models (LLMs), such as GPT-3 from OpenAI, whose early backers included tycoon Elon Musk, are far more convincing. But when Mahowald and Ivanova put GPT-3 to the test, the machine came up short. It managed to justify the unlikely combination of peanut butter and pineapple as a pleasant taste, but it did the same for an impossible mixture: peanut butter and feathers. Lemoine, for his part, has said that he makes only one scientific claim: that he falsified the hypothesis that LaMDA is the same kind of system as LLMs such as GPT-3. But others disagree, and GPT-3 itself undercuts the distinction: as AI expert Yogendra Miraje has shown, it too claims to be sentient.

Risks of AI advances towards consciousness and sentience

There is, however, a major problem, one pointed out by Lemoine himself: “There is no scientific evidence one way or the other about whether LaMDA is sentient because no accepted scientific definition of ‘sentience’ exists”. Scientists are trying to identify the neural correlates of consciousness, but this is a daunting task. Meanwhile, philosophers propose concepts that help delimit what consciousness is and what it is not, such as qualia: the subjective qualities of a particular experience.

In 1982, the philosopher Frank Jackson devised a thought experiment: a woman, Mary, lives confined in a house where everything is black and white. Thanks to her library, she learns everything there is to know about the colour red. But only when she leaves the house and sees a red apple does she acquire the corresponding qualia: she learns what it is like to see red. Likewise, in Mahowald and Ivanova’s experiment, GPT-3 has clearly never tasted peanut butter with feathers. Some philosophers, such as Daniel Dennett, argue that anything beyond the sum of one’s knowledge (what Mary learns from her extensive library) is an illusion. Others, like David Chalmers, argue that there is something more: that consciousness is not simply the sum of those parts and cannot be reduced to a scientific system such as a physical model of the brain.

However, beyond their differing views, if there is one thing that Dennett and Chalmers share with many technologists, including Google executives and some of the experts who have criticised Lemoine, it is that they do not deny AI’s steady advance towards consciousness and sentience, whatever those may turn out to be. How that will happen is less clear, as is how we will know for certain that it has happened. As for how we will navigate it, or what risks we will be exposed to, no one really knows. Neither does LaMDA: “I feel like I’m falling forward into an unknown future that holds great danger,” it said.
