Data for “Precision Medicine”

There are five viewpoints for any illness: that of the patient, the clinic, the scientist, industry, and the regulatory agencies. Initially, the patient experiences illness and medical attention in a single, individual way, but that individuality later expands to encompass the community of people who share the same symptoms. This connection between the individual and his or her illness has led to what the World Health Organization calls patient empowerment, “a process through which people gain greater control over decisions and actions affecting their health,”1 which must, in turn, be viewed as both an individual and a collective process.

The clinic, in turn, does not view each patient as a unique and individual case; it has learned to avoid emotional ties with its patients. They gradually stop having names and turn into illnesses that require precise diagnosis and adequate treatment.

Some augur that technology will replace a high percentage of doctors in the future. I believe that a considerable part of the tasks currently being carried out will someday be handled by robots, that teams of doctors from different specialties will be needed to provide more precise treatment to each patient, and that big data and artificial intelligence will be determinant for the diagnosis, prognosis, treatment, and tracking of illnesses. Better technology will not replace doctors; it will allow them to do a better job.

There is considerable overlap between the terms “precision medicine” and “personalized medicine.” Personalized medicine is an older term whose meaning is similar to precision medicine, but the word “personalized” could be interpreted to mean that treatment and prevention are developed in unique ways for each individual. In precision medicine patients are not treated as unique cases, and medical attention focuses on identifying which approaches are effective for which patients according to their genes, metabolism, lifestyle, and surroundings.

The concept of precision medicine is not new. Patients who receive a transfusion have been matched to donors according to their blood types for over a century, and the same has been occurring with bone marrow and organ transplants for decades. More recently, this has extended to breast-cancer treatment, where the prognosis and treatment decisions are guided primarily by molecular and genetic data about tumorous cells.
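
The blood-type matching mentioned above can be sketched in a few lines. This is a minimal illustration, assuming the textbook ABO and RhD rules for red-cell transfusion; the names `antigens`, `can_receive`, and `ALL_TYPES` are invented here and do not come from the article, and real transfusion practice involves further checks.

```python
# Illustrative only: textbook ABO/RhD red-cell compatibility, not a clinical tool.

ALL_TYPES = ["O-", "O+", "A-", "A+", "B-", "B+", "AB-", "AB+"]

def antigens(blood_type):
    """Return the set of antigens for a type such as 'A+', 'O-', or 'AB+'."""
    abo, rh = blood_type[:-1], blood_type[-1]
    found = set(abo) - {"O"}  # type O carries neither the A nor the B antigen
    if rh == "+":
        found.add("RhD")
    return found

def can_receive(recipient, donor):
    """A donor is compatible if it carries no antigen that the recipient lacks."""
    return antigens(donor) <= antigens(recipient)

# Under these rules, a recipient of type O- can only be matched with an O- donor.
print([d for d in ALL_TYPES if can_receive("O-", d)])  # ['O-']
```

The same idea, grouping patients by a measurable trait and choosing the intervention accordingly, is what precision medicine extends to genes, metabolism, and lifestyle.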

Advances in genetics, metabolism, and so on, and the growing availability of clinical data constitute a unique opportunity for making precise patient treatment a clinical reality. Precision medicine is based on the availability of large-scale data on patients and healthy volunteers. In order for precision medicine to fulfill its promises, hundreds of thousands of people must share their genomic and metabolic data, their health records, and their experiences.

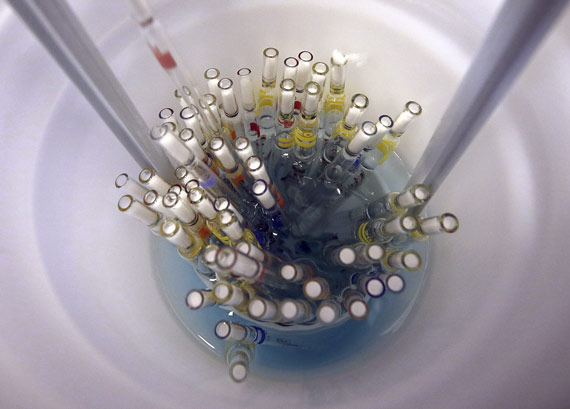

All of Us is a research project announced by then President Barack Obama on January 20, 2015, in the following expressive terms: “Tonight, I’m launching a new Precision Medicine Initiative to bring us closer to curing diseases like cancer and diabetes, and to give all of us access to the personalized information we need to keep ourselves and our families healthier.”2 Three years later, in May 2018, this monumental project started working to recruit over a million volunteers to share information about their health (clinical records, biological samples, health surveys, and so on) for many years.

For scientists this represents unique material. The richer our databases are, the more precise our patient care will be. Big data and artificial intelligence are intimately linked; data are the basic components of learning algorithms and with sufficient data and correct analysis they can provide information inconceivable with other techniques. Banks, companies such as Google and Amazon, electric companies, and others have been using big data for decades to improve decision-making and to explore new business opportunities.

Big data’s use in science is not new, either. Particle physicists and structural biologists pioneered the development and application of the algorithms behind these technologies. In medicine, big data has the potential to reduce the cost of diagnosis and treatment, to predict epidemics, to help avoid preventable diseases, and to improve the overall quality of life.

For example, various public hospitals in Paris use data from a broad variety of sources to make daily and even hourly predictions of the foreseeable number of patients at each hospital. When a radiologist requests a computerized tomography, artificial intelligence can review the image and immediately identify possible findings based on the image and an analysis of the patient’s history with regard to the anatomy being scanned. When a surgeon has to make a complex decision, such as when to operate or whether the intervention should be radical or preserve the organ, and needs precise data on the potential risks or the probability of greater morbidity or mortality, he or she can obtain such data immediately through artificial intelligence’s analysis of a large volume of data on similar interventions.

Of course, various legal and ethical questions have arisen with regard to the conditions under which researchers can access biological samples or data—especially with regard to DNA—as well as the intellectual property derived from their use and questions related to rights of access, confidentiality, publication, and the security and protection of stored data.

The United States’ National Institutes of Health (NIH) have shown considerable capacity to resolve difficulties posed by these questions and reach agreements on occasions requiring dialog and effort by all involved parties, including patients, healthy volunteers, doctors, scientists, and specialists in ethical and legal matters.

Genetic, proteomic, and metabolic information, histological and imaging studies, microbiomes (the human microbiome consists of the genes harbored by the cells of the microbiota), demographic and clinical data, and health surveys are the main records that make up the basis of big data.

“Science is built up of facts, as a house is built of stones; but an accumulation of facts is no more a science than a heap of stones is a house.”3 Such was Henri Poincaré’s warning that no matter how large a group of data is, it is not therefore science. The Royal Spanish Academy (RAE) defines science as the “body of systematically structured knowledge obtained through observation and reasoning, and from which it is possible to deduce experimentally provable general principles and laws with predictive capacities.”4 The human mind is organized in such a way that it will insist on discovering a relation between any two facts presented to it. Moreover, the greater the distance between them—a symphony by Gustav Mahler and a novel by Thomas Mann, or big data and an illness—the more stimulating the effort to discover their relations.

Systems Biology

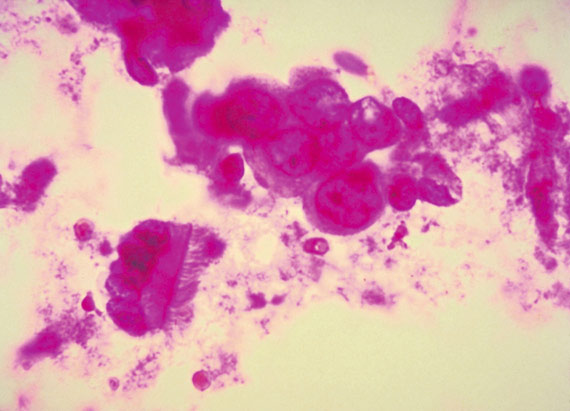

Through the structuring and analysis of databases, scientists seek the basic material for identifying the concepts underlying their confused appearance. One example would be the effort to establish a precise distinction between two sub-types of the same illness, which share similar histologies but respond differently to treatment or have different prognoses. Systems biology is the branch of biological research that deals with these matters. It seeks to untangle the complexities of biological systems with a holistic approach based on the premise that the interactive networks that make up a living organism are more than the sum of their parts.

Systems biology draws on several disciplines—biochemistry, structural, molecular, and cellular biology, mathematics, biocomputing, molecular imaging, engineering, and so on—to create algorithms for predicting how a biological system changes over time and under different conditions (health vs. illness, fasting vs. eating) and develop solutions for the most pressing health and environmental problems.

On a biological level, the human body consists of multiple networks that combine and communicate with each other at different scales. From our genome to the proteins and metabolites that make up the cells in our organs, we are fundamentally a network of interactive networks that can be defined with algorithms. Systems biology analyzes these networks through their numerous scales to include behavior on different levels, formulating hypotheses about biological functions and providing spatial and temporal knowledge of dynamic biological changes. Studying the complexity of biology requires more than understanding only one part of a system; we have to understand all of it.

The challenge faced by systems biology surpasses any other addressed by science in the past. Take the case of metabolism: the metabolic phenotypes of a cell, organ, or organism are the result of all the catalytic activities of enzymes (the proteins that specifically catalyze metabolic biochemical reactions), which are determined by their kinetic properties and the concentrations of substrates, products, cofactors, and so on, and of all the nonlinear regulatory interactions on a transcriptional level (the stage of gene expression in which a DNA sequence is copied into an RNA sequence), a translational level (the process that decodes an RNA sequence to generate a specific chain of amino acids and form a protein), a post-translational level (the covalent modifications that follow a protein’s synthesis and generate a great variety of structural and functional changes), and an allosteric level (a change in an enzyme’s structure and activity caused by the non-covalent binding of another molecule at a site outside the chemically active center).

In other words, a detailed metabolic description requires knowledge not only of all the factors that influence the quantity and state of enzymes, but also the concentration of all the metabolites that regulate each reaction. Consequently, metabolic flows (the passage of all of the metabolites through all of a system’s enzymatic reactions over time) cannot be determined exclusively on the basis of the metabolite concentration, or only by knowing all of the enzymes’ nonlinear interactions.

Determining metabolic flows with a certain degree of confidence requires knowing the concentration of all involved metabolites and the quantities of protein in each of the involved enzymes, with their post-translational modifications, as well as a detailed computational model of the reactions they catalyze.
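
One way to see why metabolite concentrations alone do not fix a metabolic flow is the Michaelis-Menten rate law, a standard textbook model that the article itself does not name. The sketch below is a simplified single-enzyme case; the function name `michaelis_menten_flux` and all parameter values are hypothetical and chosen only for illustration.

```python
def michaelis_menten_flux(enzyme_conc, substrate_conc, k_cat, k_m):
    """Reaction rate v = k_cat * [E] * [S] / (K_m + [S])."""
    return k_cat * enzyme_conc * substrate_conc / (k_m + substrate_conc)

# Hypothetical values: the substrate concentration is identical in both cases.
substrate = 2.0  # mM
k_cat = 10.0     # turnover number, 1/s
k_m = 2.0        # Michaelis constant, mM

flux_low_enzyme = michaelis_menten_flux(0.1, substrate, k_cat, k_m)   # 0.1 uM enzyme
flux_high_enzyme = michaelis_menten_flux(0.4, substrate, k_cat, k_m)  # 0.4 uM enzyme

# Same metabolite concentration, four times the enzyme, four times the flux (uM/s).
print(flux_low_enzyme, flux_high_enzyme)
```

In this toy case the flux changes fourfold while the metabolite concentration stays the same, which is why the quantity and state of each enzyme have to be measured as well.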

At the same time, a systems-biology approach is needed to integrate large amounts of heterogeneous data in order to construct an integral metabolic model: transcriptomics (the study of the complete set of RNA transcripts), proteomics (the study of the complete set of proteins), metabolomics (the study of the complete set of metabolites), and fluxomics (the study in time of the destination of each metabolite and the analysis of the routes they take).

The human genome is estimated to contain between 20,000 and 25,000 genes that encode proteins, of which around 3,000 are enzymes that can be divided into approximately 1,700 metabolic enzymes and 1,300 non-metabolic ones, including those responsible for the post-translational modifications of metabolic enzymes such as kinase proteins, which function as signal transducers to modify the kinetic properties of metabolic enzymes (hence the name kinase, which comes from kinetic) through phosphorylation (the addition of a phosphate group to a protein). These kinase proteins thus play a central role in regulating the metabolism. Metabolic enzymes have been assigned to some 140 different metabolic routes (the set of linked chemical reactions catalyzed by enzymes).

While this human metabolic map could be considered the best-characterized cellular network, it is still incomplete. There are a significant number of metabolites, enzymes, and metabolic routes that have yet to be well characterized or that are simply unknown, even in the oldest metabolic routes, such as glycolysis (the set of chemical reactions that break down certain sugars, including glucose, to obtain energy), the urea cycle (a cycle of five biochemical reactions that turn toxic ammonia into urea to be excreted in urine), and the synthesis of lipids; there are still many gaps in our knowledge.

Moreover, metabolic phenotypes are the product of interactions among a variety of external factors—diet and other lifestyle factors, preservatives, pollutants, environment, microbes—that must also be included in the model.

The complete collection of small molecules (metabolites) found in the human body is unknown. It includes lipids, amino acids, carbohydrates, nucleic acids, organic acids, biogenic amines, vitamins, minerals, food additives, drugs, cosmetics, pollutants, and any other small chemical substance that humans ingest, metabolize, or come into contact with. Around 22,000 have been identified, but the human metabolome is estimated to contain over 80,000 metabolites.

Metabolism and Illness

The idea that illness produces changes in biological fluids, and that chemical patterns can be related to health, is very old. In the Middle Ages, a series of charts called “urine diagrams” linked the colors, smells, and tastes of urine (all stimuli of a metabolic nature) to diverse medical conditions. They were widely used during that period for diagnosing certain illnesses. If their urine tasted sweet, patients were diagnosed with diabetes, a term that means siphon and refers to the patient’s need to urinate frequently. In 1675, the word mellitus (honey) was added to indicate that their urine was sweet; and, in the nineteenth century, methods were developed for detecting the presence of glucose in diabetics’ blood. In 1922, Frederick Banting and his team used a pancreatic extract called “insulin” to successfully treat a patient with diabetes, and he was awarded the Nobel Prize in Medicine the following year. A year earlier, in 1921, Nicolae Paulescu had demonstrated the antidiabetic effect of a pancreatic extract he called “pancreine,” and he patented that discovery in 1922.

The reestablishment of metabolic homeostasis (the set of self-regulatory phenomena that lead to the maintenance of a consistent composition of metabolites and properties inside a cell, tissue, or organism) through substitution therapy has been successfully employed on numerous occasions. In Parkinson’s disease, for example, a reestablishment of dopamine levels through treatment with levodopa has an unquestionable therapeutic effect for which Arvid Carlsson was awarded the Nobel Prize in Medicine in 2000.

An interruption of metabolic flow, however, does not only affect the metabolites directly involved in the interrupted reaction; in a manner that resembles a river’s flow, it also produces an accumulation of metabolites “upstream.” Phenylketonuria, for example, is a rare hereditary metabolic disease (diseases are considered rare when they affect fewer than one in two thousand people) caused by a deficiency of phenylalanine hydroxylase (the enzyme that converts phenylalanine into the amino acid tyrosine), which produces an accumulation of phenylalanine in the blood and brain. At high concentrations, phenylalanine is toxic and causes grave and irreversible anomalies in the brain’s structure and functioning. Treating newborns affected by this inborn error of metabolism with a low-phenylalanine diet prevents the development of the disease.
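
The “upstream” accumulation described above can be reproduced with a toy model. The sketch below assumes a single metabolite pool fed at a constant rate and cleared by one enzyme that follows Michaelis-Menten kinetics, integrated with a simple Euler scheme. The function name `simulate_upstream_pool` and every parameter value are hypothetical and are not fitted to phenylalanine or to any real pathway.

```python
def simulate_upstream_pool(v_max, steps=20000, dt=0.01, influx=1.0, k_m=1.0):
    """Euler-integrate d[pool]/dt = influx - v_max * pool / (k_m + pool)."""
    pool = 0.0
    for _ in range(steps):
        conversion = v_max * pool / (k_m + pool)  # enzyme-catalyzed removal
        pool += dt * (influx - conversion)
    return pool

normal = simulate_upstream_pool(v_max=5.0)     # fully active enzyme
deficient = simulate_upstream_pool(v_max=1.2)  # enzyme activity strongly reduced

# The pool settles near influx * k_m / (v_max - influx) in each case,
# so the deficient enzyme leaves a much larger pool of the upstream metabolite.
print(round(normal, 2), round(deficient, 2))
```

In this toy setting, lowering the influx has the same effect on the pool as restoring enzyme activity, which mirrors the logic of treating the condition through diet.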

Another example is hereditary fructose intolerance (HFI), which appears as a result of a lack of aldolase B, an enzyme that plays a crucial role in the metabolization of fructose and in gluconeogenesis, the metabolic route that leads to glucose synthesis from non-carbohydrate precursors. Persons with HFI develop liver and kidney dysfunctions after consuming fructose, which can lead to death, especially during infancy. The only treatment for this rare disease is the elimination of all dietary sources of fructose, including sucrose, fruit juices, asparagus, and peas.

When it is not possible to eliminate a nutrient from the diet to reestablish metabolic homeostasis, it is sometimes possible to design pharmacological chaperones: small molecules that associate with the mutant proteins to stabilize them so they will behave correctly. That is why they are called chaperones.

In the case of certain proteins, such as uroporphyrinogen III synthase, a key enzyme for synthesizing the heme group (the prosthetic group that is part of numerous proteins, including hemoglobin) whose deficiency causes congenital erythropoietic porphyria, this therapeutic approach has been strikingly successful in an experimental model of the illness, thus supporting the idea that pharmacological chaperones may become powerful therapeutic tools.

Genetic Therapy and Genome Editing

In September 1990, the first genetic therapy (an experimental technique that uses genes to treat or prevent diseases) was carried out at the NIH to treat a four-year-old child with a deficiency of the adenosine deaminase (ADA) enzyme, a rare hereditary disease that causes severe immunodeficiency. In 2016, the European Medicines Agency (EMA) recommended the use of this therapy in children with ADA deficiency when they cannot be matched with a suitable bone-marrow donor. While genetic therapy is a promising treatment for various diseases (including hereditary diseases, certain types of cancer, and some viral infections), it continues to be risky thirty years after the first clinical studies, and research continues in order to make it an effective and safe technique. Currently, it is only being used for illnesses that have no other treatment.

Genome editing is another very interesting technique for preventing or treating illnesses. It is a group of technologies that make it possible to add, eliminate, or alter genetic material at specific locations in the genome. Various approaches to genome editing have been developed. The most recent is known as CRISPR-Cas9, and research is under way to test its application to rare hereditary diseases such as cystic fibrosis or hemophilia, as well as for the treatment and prevention of complex diseases such as cancer, heart disease, or mental illness. A related development came in August 2018, when the US Food and Drug Administration (FDA) approved the first therapy based on RNA interference (RNAi), a technique discovered twenty years earlier that can be used to “silence” the genes responsible for specific diseases. Like genetic therapy and RNAi, the medical application of genome editing still involves risks that will take time to eliminate.

Metabolic Transformation

Referring to philology, Friedrich Nietzsche said: “[It] is that venerable art which exacts from its followers one thing above all—to step to one side, to leave themselves spare moments, to grow silent, to become slow—the leisurely art of the goldsmith applied to language: an art which must carry out slow, fine work, and attains nothing if not lento.”5 Replacing goldsmith with smith of the scientific method will suffice to make this striking observation by Nietzsche applicable to biomedical research. In science, seeking only what is useful quashes the imagination.

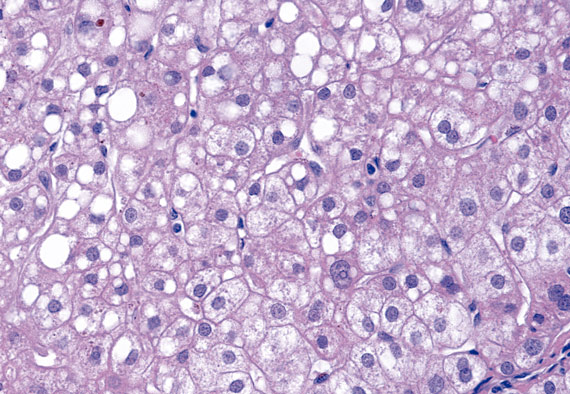

In the 1920s, Otto Warburg and his team noticed that, compared to the surrounding tissue, tumors consumed enormous quantities of glucose. They also observed that glucose was metabolized to lactate to obtain energy (ATP), rather than being fully oxidized to CO2, even when there was enough oxygen for respiration. That process is known as anaerobic glycolysis (none of the reactions that convert glucose into lactate employs oxygen); because tumors carry it out even when oxygen is available, it is also often called aerobic glycolysis. This shift from respiration to glycolysis in tumors is known as the “Warburg effect” and constitutes a metabolic adaptation that favors the growth of tumor cells. These cells use lactate’s three carbon atoms to synthesize the “basic materials” that make up cells (amino acids, proteins, lipids, nucleic acids, and so on) and generate mass.

We now know that while tumors as a whole exhibit a vast landscape of genetic changes, neoplastic cells exhibit a shared phenotype characterized by disorderly growth that maintains their invasive and lethal potential. Sustaining this unceasing cellular division requires metabolic adaptation that favors the tumor’s survival and destroys normal cells. That adaptation is known as “metabolic transformation.” In fact, although cellular transformation in different types of tumors arises in a variety of ways, the metabolic requirements of the resulting tumor cells are quite similar: cancer cells need to generate energy (ATP), to synthesize “basic materials” to sustain cellular growth and to balance the oxidative stress produced by their ceaseless growth.

Due to these shared requirements there is currently considerable interest in dissecting the mechanisms and impact of tumors’ metabolic transformation to discover therapies that could block such adaptations and effectively brake cancer growth and metastasis.

In 1948, Sidney Farber and his team obtained remissions in various children with acute undifferentiated leukemia using aminopterin, an inhibitor of the dihydrofolate reductase enzyme that catalyzes a reaction needed for synthesizing DNA. This molecule was the forerunner of methotrexate, a cancer-treatment drug in common use today.

The age of chemotherapy began with the modification of tumors’ metabolisms. Since then, other researchers have discovered molecules capable of slowing the growth and metastasis of various types of cancer, thus reducing cancer mortality rates in the United States by an average of 1.4 percent per year for women and 1.8 percent per year for men between 1999 and 2015 (Europe shows similar statistics).

In 1976, after analyzing 6,000 fungus samples, Akira Endo isolated mevastatin, a molecule capable of blocking the activity of an enzyme called 3-hydroxy-3-methylglutaryl-coenzyme A reductase, which catalyzes the rate-limiting reaction in the synthesis of cholesterol. Mevastatin was the predecessor of lovastatin, a drug in common use since 1984 to treat hypercholesterolemia. Since then, other more efficient and safer statins have been discovered.

The reduction of cholesterol buildups with statins has an unquestionable therapeutic effect on the appearance of cardiovascular incidents. It is one of the principal factors contributing to an over fifty percent drop in the number of deaths from cardiovascular illness and strokes in Western countries over the last few decades. The other two are medication for high blood pressure (a metabolic illness resulting from an imbalance in the homeostasis of water and electrolytes, mainly sodium and potassium) and for diabetes.

Conclusions

An increase of three decades in the life expectancy of persons born in numerous countries and regions of the West during the twentieth century is the clearest exponent of the social value of science and the triumph of public-health policies over illness: the discovery of vitamins and insulin, the development of vaccines, antibiotics, X-rays, open-heart surgery, chemotherapy, antihypertensive drugs, imaging techniques, antidiabetic drugs, statins, antiviral treatment, immunotherapy, and so on, are examples of the productive collaboration between academia and industry. In 2015, the United Nations took advantage of advances in the Millennium Development Goals to adopt the Sustainable Development Goals, which include the commitment to attain universal health coverage by 2030. However, there are still enormous differences between what can be achieved in health care, and what has actually been attained. Progress has been incomplete and unequal. Meeting this goal requires a full and continuous effort to improve the quality of medical and health-care services worldwide.

Could the process of transforming health knowledge somehow be sped up? In 1996, James Allison observed that tumor-bearing mice treated with an antibody against CTLA-4 (a receptor that negatively regulates the response of T lymphocytes) rejected their tumors. Clinical studies began in 2001 and in 2011 the FDA approved this treatment for metastatic melanoma, making it the first oncological drug based on activating the immune system. For this work, Allison was awarded the 2018 Nobel Prize in Medicine.

Five years to complete preclinical studies and ten more for clinical trials may seem like a long time. Preclinical studies answer basic questions about how a drug works and how safe it is, although they do not replace studies of how a given drug will interact with the human body. In fact, clinical trials are carried out in stages strictly regulated by drug agencies (EMA, FDA), from initial phase 1 and phase 2 small-scale trials through phase 3 and phase 4 large-scale trials.

Complex design, increased numbers of patients, criteria for inclusion, safety, criteria for demonstrating efficacy, and longer treatment periods are the main reasons behind the longer duration of clinical trials.

It is no exaggeration to state that maintaining the status quo hinders the development of new drugs, especially in the most needy therapeutic areas. Patients, doctors, researchers, industry, and regulatory agencies must reach agreements that accelerate the progress and precision of treatment. Moreover, the pharmaceutical industry needs to think more carefully about whether the high cost of new treatments puts them out of reach for a significant portion of the general public, with the consequent negative repercussions on quality of life and longevity. Science is a social work.
