Machines, both analog and digital, have been used over time to help workplace designers calculate outputs of work and, indeed, to replace work through automation and, now, through the integration of artificial intelligence (AI) tools and applications. What types of “intelligence” are expected from technologies? How does management use personal data acquired by machines and make assumptions about the respective types of intelligence? Data has been gathered from job candidates’ and workers’ activities over time, where even physical movements and sentiments, as well as precise social media use, are tracked. When “big data” is big enough, it is used to train algorithms that predict talents and capabilities; monitor performance; set and assess work outputs; link workers to clients; judge states of being and emotions; provide modular training on the factory floor; look for patterns across workforces; and more. How does AI become central to this process of decision-making? In this context, what risks do workers face today in the digitalized, AI-augmented workplace?

Workers have always faced worker tracking and performance monitoring where an overarching business profit motive dominates the terms of the employment relationship, and workers want a decent and enjoyable life, paid for by their work and commitment to their employer and wage provider. Today, however, the employment relationship is changing, and there is a new type of “actor” in the workplace. Machines, both analog and digital, have been used over time to help workplace designers calculate outputs of work and, indeed, to replace work through automation; now, via the integration of artificial intelligence (AI) tools and applications, some machines have new responsibilities and even autonomy, as well as being expected to display various forms of human intelligence and make decisions about workers themselves.

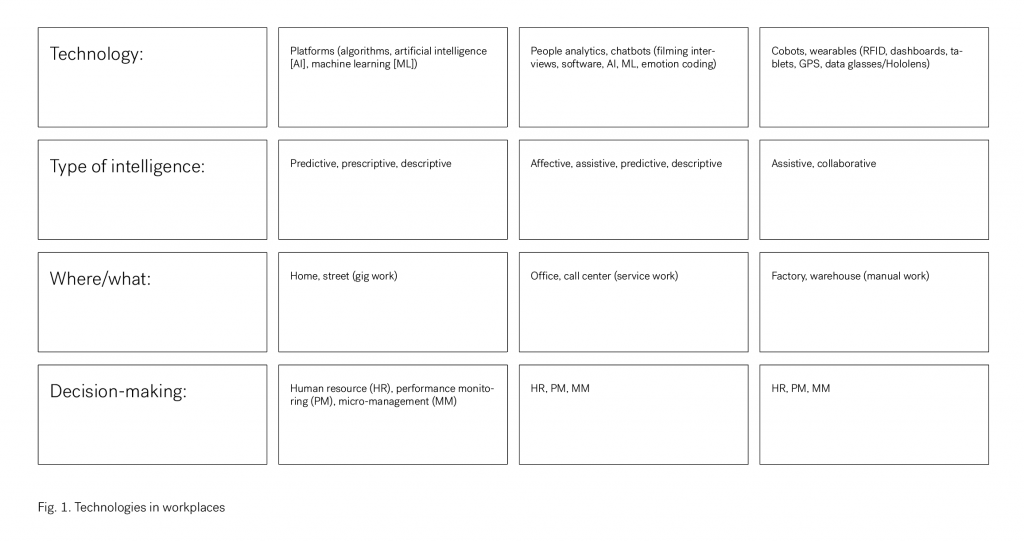

Figure 1 outlines where, and how, new technologies are being implemented into workplaces; the types of “intelligence” which are expected from these technologies; and then, the precise ways that management uses the data produced by such technological processes with the assumptions of respective types of intelligence. There are a number of ways that the newest technologies are being used by management as AI takes center stage. Data has been, and is being, accumulated from job candidates’ and workers’ activities over time, from telephone calls, computer use, swiping in and out with “smart cards,” and up to today, where even physical movements and sentiments, as well as precise social media use, are tracked and monitored.

For human resources, collections of data, called “big data” once they reach a large enough volume, are being used to train algorithms that predict job candidates’ and workers’ talents and capabilities; monitor, gauge, and encourage performance; set and assess work outputs; link workers to clients; judge states of being and emotions; provide modular training on the factory floor; look for patterns across workforces of, for example, sickness; and much more.

This chapter, in line with these developments, outlines how AI is increasingly part of the process of decision-making and identifies the risks that workers face today, which should be acknowledged and recognized by policy-makers and management stakeholders alike.

1. People Analytics: Human Capital Management and Performance Monitoring

AI is seen today as the most innovative and promising arena for workplace and workforce management. Forty percent of human resources (HR) functions being applied across the world in companies small and large are now using AI-augmented applications. These companies are mostly based in the USA, but some European and Asian organizations are also coming on board. A PricewaterhouseCoopers survey shows that more and more global businesses are beginning to see the value of AI in supporting workforce management (PwC, 2018). It is claimed furthermore that 32% of personnel departments in tech companies and others are redesigning organizations with the help of AI to optimize “for adaptability and learning to best integrate the insights garnered from employee feedback and technology” (Kar, 2018). A recent IBM report (IBM, 2018) shows that half of chief HR officers identified for the study anticipate and recognize the potentials for technology in HR surrounding operations and the acquisition and development of talent. A Deloitte report shows that 71% of international companies consider people analytics a high priority for their organizations (Collins, Fineman, and Tsuchida, 2017) because it should allow organizations to not only provide good business insights but also deal with what has been called the “people problem.”

“People problems” are also called “people risks” (Houghton and Green, 2018). These have several dimensions, outlined in a Chartered Institute for Personnel Development (CIPD) report as involving:

- talent management;

- health and safety;

- employee ethics;

- diversity and equality;

- employee relations;

- business continuity;

- reputational risk (Houghton and Green, 2018).

“People analytics” is an increasingly popular HR practice, where big data and digital tools are used to “measure, report, and understand employee performance, aspects of workforce planning, talent management, and operational management” (Collins, Fineman, and Tsuchida, 2017). Every sector and organization requires HR, which is responsible for everything from recruitment activity to preparing employment contracts and managing the relationship between workers and employers.

Clearly there is some discrepancy in the role of HR, where some argue its function is only bureaucratic, while others claim that it should play a prominent role in business operations and execution. People analytics practices are part of both levels of HR, where computerization, data gathering and monitoring tools allow organizations to conduct “real-time analytics at the point of need in the business process … [and allow] for a deeper understanding of issues and actionable insights for the business” (ibid.). Prediction algorithms applied for these processes often reside in a “black box” (Pasquale, 2015), where people do not fully understand how they work, but even so, computer programs are given the authority to make “prediction by exception” (Agarwal et al., 2018). “Prediction by exception” refers to processes whereby computers deal with large data sets and are able to make reliable predictions based on routine and regular data, but also to spot outliers and even send notifications “telling” the user that checks should be done, or whether human assistance or intervention should be provided.
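The idea of “prediction by exception” described above can be illustrated with a minimal sketch. It is not drawn from any of the cited sources: the function name, the z-score rule, and the threshold of 2.0 are all assumptions chosen for illustration. The program produces a routine prediction from historical data and flags a new observation for human review when it falls far outside the usual range.

```python
from statistics import mean, stdev

def predict_by_exception(history, new_value, z_threshold=2.0):
    """Toy illustration: predict from routine data, flag outliers for review.

    `history` is a list of past numeric observations (hypothetical data).
    Returns a (decision, prediction) pair, where decision is "auto" when the
    new value looks routine and "review" when a human should check it.
    """
    prediction = mean(history)   # routine prediction: the historical average
    spread = stdev(history)      # how much past values typically vary

    if spread == 0:
        # No variation in the past data: any deviation at all is an exception.
        is_outlier = new_value != prediction
    else:
        # Distance from the prediction, in units of standard deviation.
        is_outlier = abs(new_value - prediction) / spread > z_threshold

    return ("review" if is_outlier else "auto"), prediction

# Hypothetical weekly output figures for one worker.
weekly_outputs = [98, 102, 101, 99, 100, 97, 103]
print(predict_by_exception(weekly_outputs, 101)[0])  # routine value
print(predict_by_exception(weekly_outputs, 60)[0])   # unusual value
```

Even in this toy form, the threshold that separates “routine” from “exception” is a design decision made by whoever builds the system, which is part of what the “black box” critique points to.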

Also called “human analytics,” “talent analytics,” and “human resource analytics,” people analytics are defined broadly as the use of individualized data about people to help management and HR professionals make decisions about recruitment, that is, who to hire; in worker appraisals and promotion considerations; to identify when people are likely to leave their jobs; and to select future leaders. People analytics are also used to manage workers’ performance. First, this section looks at the human capital management aspects of people analytics, where recruitment and talent prediction occur. Second, performance management with the use of people analytics is outlined.

1.1 Human Capital Management

AI-enhanced HR practices can help managers obtain seemingly objective wisdom about people even before they hire them, as long as management has access to data about prospective workers, which has significant implications for tailoring worker protections and preventing occupational safety and health (OSH) risks at the individual level. Ideally, people analytics tools can aid employers to make good decisions about workers. Indeed, algorithmic decision-making in people analytics could be used to support workforces by aligning employee performance feedback and performance pay, and workforce costs, with business strategy and support for specific workers (Aral et al., 2012, cited in Houghton and Green, 2018, p. 5). Workers should be personally empowered through having access to new forms of data that help them to identify areas of improvement, stimulate personal development, and achieve higher engagement.

Another form of people analytics involves filmed job interviews, where AI is used to judge both verbal and nonverbal cues. One such product is made by a group called HireVue and is used by over 600 companies. This practice is carried out by organizations including Nike, Unilever, and Atlanta Public Schools, who are using products that allow employers to interview candidates on camera. The aim is to reduce bias that can come about if, for example, an interviewee’s energy levels are low, or if the hiring manager has more affinity to the interviewee based on similarity, for example, age, race, and related demographics. However, evidence has already emerged that preferences from previous hiring managers are reflected in hiring, and a report by Business Insider reveals that heterosexual white men are the hiring preference ceteris paribus (Feloni, 2017). If data provided to an algorithm reflects the dominant bias reflected over time, then it may score someone with “in-group” facial expressions higher and give lower ratings to other cues, tied to sexual orientation, age, and gender, that do not resemble those of a white male.

1.2 Performance Management

While performance management is seen in most workplaces, there are hundreds of methods that have been tried and tested over many years. Perhaps the best-known era when performance management began to use technology to make decisions about workers’ performance in the industrializing world was the period of scientific management. The well-known industrialists Taylor and the Gilbreths devised schemes to understand workplace productivity as linked to specific, measured human actions in the workplace. These industrialists searched for scientific methods to identify and depict perfect bodily movements for ideal productive behaviors through technologically informed work designs.

In 1927 the League of Nations published papers from the 1927 International Economic Conference entitled “Scientific Management in Europe.” This report was printed in the interwar period, when nations were furiously seeking to set up interdependent organizations and establish a climate of cooperation to reduce the chances for any further wars. Interestingly, a standardization of industrial practices was advocated in this report, and scientific management was heralded as a field “par excellence for international cooperation.” Indeed, at the conference, scientific management was defined as:

…the science which studies the relations between the different factors in production, and especially those between the human and the mechanical factors. Its object is to obtain, by the rational utilization of these various factors, the optimum output.

So, Taylorism was not only a project of worker performance management but had a larger remit and ideology. The International Labour Office reported that scientific management had already “overflowed the limits within which it was originally applied by Taylor” and its recommendations and practices “now cover all departments of the factory, all forms of manufacture, all forms of economic activity, banking, commerce, agriculture and the administration of public services.”

Using a series of technological devices to look at micro-movements, including a spring-driven camera, an electric motor-driven camera, and a microchronometer (an instrument for measuring very small intervals of time), these scientists looked for the hoped-for “best way” to carry out work in bricklaying and in steel factories. The Gilbreths also measured workers’ heart rates using a stethoscope and stopwatch, a foreshadowing of the heart rate measurements seen in fitness armbands that are increasingly being used in workplace initiatives today (Moore, 2018a).

There is a large literature about performance management, perhaps beginning with scientific management, that emerged from various disciplines, from organizational psychology, sociology, sociology of work, and critical management studies, in which researchers looked at the ways organizations try to balance productivity with the management of workers’ activities and to organize various mechanisms that surround these processes.

The school of human relations followed scientific management, followed by systems rationalism in which “operations research” dominated, followed by the organizational culture and quality period of work design history, and now the era I have called agility management systems (Moore, 2018a). Each period of work design history involves attempts to identify the “best” logic of calculation, where performance management (PM) is a calculative practice that is also institutionally embedded and socially transformative. Increasingly, ways to calculate workers’ behaviors are founded in a neoliberal economic rationality.

Economic practices of calculation create markets (Porter, 1995) and enter organizations with a logic of value calculation, which, in turn, shapes the organization as well as requires “responsibility from individuals rendered calculable and comparable” (Miller and O’Leary, 1987). Through quantification, the designer of a PM system decides what will be considered calculable and comparable. While there are assumptions of the “bottom line,” productivity and efficiency do not hold an automatic link to workers’ safety and health, contract and livelihood protections. Any time there is a method designed to characterize a person, that is, the ideal worker with the best performance scores, we are making people up (Hacking, 1986). The enumeration of characteristics then allows for the generation of statistics which function as specified calculus that are seemingly neutral, docile, and immune to query. Desrosières indicates that “placing acts, diseases, and achievements in classes of equivalence… then shape how the bearer is treated” (2001, p. 246). Rose stated that “numbers, like other ‘inscription devices,’ actually constitute the domains they appear to represent; they render them representable in a docile form—a form amenable to the application of calculation and deliberation” (Rose, 1999, p. 198, cited in Redden, 2019, pp. 40–41). Despite the range of arguments about what should be measured, too little research has focused on how decisions are taken in determining what work characteristics and factors are seen as worthy of measure.

OSH risks

If processes of algorithmic decision-making in people analytics and performance management do not involve human intervention and ethical consideration, these human resource tools could expose workers to heightened structural, physical, and psychosocial risks and stress. How can workers be sure decisions are being made fairly, accurately, and honestly if they do not have access to the data that is held and used by their employer? OSH risks of stress and anxiety arise if workers feel that decisions are being made based on numbers and data that they have no access to, nor power over. This is particularly worrying if people analytics data leads to workplace restructuring, job replacement, job description changes, and the like. People analytics are likely to increase workers’ stress if data is used in appraisals and performance management without due diligence in process and implementation, leading to questions about micromanagement and feeling “spied on.” If workers know their data is being read for talent spotting or for deciding possible layoffs, they may feel pressured to advance their worker performance, and begin to overwork, posing OSH risks. Another risk arises with liability, where companies’ claims about predictive capacities may later be queried for accuracy or personnel departments held accountable for discrimination.

One worker liaison expert indicated¹ that worker data collection for decision-making, such as seen in people analytics, has created the most urgent issues arising with AI in workplaces. Often, works councils are not aware of the possible uses of such management tools. Or, systems are being put into place without consultation with works councils and workers. Even more OSH risks arise, such as worker stress and job losses, when the implementation of technologies is done in haste and without appropriate consultation and training, or communication. In this context it is interesting to mention a project run at the headquarters of IG Metall, in which the workplace training curricula are being reviewed in 2019, in the context of Industrie 4.0.² Findings demonstrate that training needs updating not only to prepare workers for physical risks, as has been standard in heavy industry OSH training, but also for mental and psychosocial risks introduced by digitalization at work, which includes people analytics applications.³

2. Cobots and Chatbots

2.1 Cobots

Having visited several car factories and technology centers, I have seen the huge orange robot arms in factories whirring away in expansive warehouses in industrial landscapes, building car parts and assembling cars where conveyor belts lined with humans once stood. Robots have directly replaced workers on the assembly line in factories in many cases, and sometimes, AI is confused with automation. Automation in its pure sense involves, for example, the explicit replacement of a human’s arm for a robot arm. Lower-skilled, manual work has historically been most at risk and is still at a high risk of automation. Now, automation can be augmented with autonomous machine behavior or “thinking.” So, the AI dimension of automation reflects where workers’ brains, as well as their limbs, may no longer be needed. Now, as one EU-OSHA review on the future of work regarding robots and work indicates, while robots were at first built to carry out simple tasks, they are increasingly enhanced with AI capabilities and are being “built to think, using AI” (Kaivo-oja, 2015).

Today, cobots are being integrated into factories and warehouses where they work alongside people in a collaborative way. They assist with an increasing range of tasks, rather than necessarily automating entire jobs. Amazon has 100,000 AI-augmented cobots, which has cut the time needed to train workers to less than two days. Airbus and Nissan are using cobots to speed up production and increase efficiency.

2.2 Chatbots

Chatbots are another AI-enhanced tool which can deal with a high percentage of basic customer service queries, freeing up humans working in call centers to deal with more complex questions. Chatbots work alongside people, not only in the physical sense but within the back-end of systems; they are implemented to deal with customer queries over the phone.

For example, Dixons Carphone uses a conversational chatbot now named Cami which can respond to first-level consumer questions on the Currys website and through Facebook Messenger. The software company Nuance launched a chatbot named Nina to respond to questions and access documentation in 2017. Morgan Stanley has provided 16,000 financial advisers with machine-learning algorithms to automate routine tasks. Call-center workers already face extensive OSH risks because of the nature of the work, which is repetitive and demanding and subject to high rates of micro-surveillance and extreme forms of measure (Woodcock, 2016).

An increasing number of activities are already recorded and measured in call centers. Words used in e-mails or stated vocally can be data-mined to determine workers’ moods, a process called “sentiment analysis.” Facial expressions likewise can be analyzed to spot signs of fatigue and moods that could lead to poor judgments, which could in turn lower the OSH risks that emerge with overwork. But chatbots, while designed to be assistive machines, still pose psychosocial risks around fears of job loss and replacement. Workers should be trained to understand the role and function of workplace bots and to know what their collaborative and assistive contributions are.
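To make the notion of “sentiment analysis” more concrete, the following is a deliberately simple sketch of how words in a message might be turned into a mood score. The word lists and the scoring rule are hypothetical and chosen only for illustration; commercial tools generally rely on trained statistical models rather than fixed lists, and nothing here describes a specific vendor’s product.

```python
import re

# Hypothetical word lists, invented for this illustration only.
POSITIVE_WORDS = {"thanks", "great", "happy", "pleased", "good"}
NEGATIVE_WORDS = {"tired", "angry", "stressed", "exhausted", "bad"}

def sentiment_score(text):
    """Return a score between -1.0 (negative) and 1.0 (positive).

    The score is the balance of positive and negative words found in the
    text; a text with no listed words is treated as neutral (0.0).
    """
    words = re.findall(r"[a-z']+", text.lower())
    positive = sum(word in POSITIVE_WORDS for word in words)
    negative = sum(word in NEGATIVE_WORDS for word in words)
    matched = positive + negative
    if matched == 0:
        return 0.0
    return (positive - negative) / matched

print(sentiment_score("I am tired and stressed after this shift"))
print(sentiment_score("Thanks, that was a great call"))
```

The sketch also shows how crude such inferences can be: a worker quoting an angry customer would be scored as negative, which is one reason why decisions based on these scores call for human oversight.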

OSH risks

Cobots can reduce OSH risks as they allow AI systems to carry out other types of mundane and routine service tasks in factories which historically create stress, overwork, musculoskeletal difficulties, and even boredom of repetitive work for people.

In EU-OSHA’s “Foresight on New and Emerging Occupational Safety and Health Risks Associated with Digitalization by 2025” (EU-OSHA, 2018) report, it is indicated that robots allow people to be removed from dangerous physical work and environments with chemical and ergonomic hazards, thus reducing OSH risks for workers (p. 89).

As a recent Netherlands Organization for Applied Scientific Research (TNO) report states, there are three types of OSH risks in human/cobot/environment interactions:

- Robot/human collision risks, where machine learning can lead to unpredictable robot behavior;

- Security risks, where robots’ Internet links can affect the integrity of software programming, leading to vulnerabilities in security;

- Environmental risks, where sensor degradation and unexpected human action in unstructured environments can lead to hazards (TNO, 2018, pp. 18–19).

AI-permitted pattern and voice recognition and machine vision mean that not only non-skilled jobs are at risk of replacement, but now, a range of nonroutine and non-repetitive jobs can be carried out by cobots and other applications and tools. In that light, AI-enhanced automation enables many more aspects of work to be done by computers and other machines (Frey and Osborne, 2013). One example of the protection of workplace OSH via AI-augmented tools is found in a chemicals company that makes optical parts for machines. The minuscule chips that are produced need to be scanned for mistakes. Previously, one person’s job was to detect mistakes with their own eyes, sitting, immobile, in front of repeated images of chips for several hours at a time. Now, AI has fully replaced this task. The OSH risks that have thereby been eliminated include musculoskeletal difficulties and eye strain and damage.⁴

However, AI-augmented robots in factories and warehouses create stress and a range of serious problems if they are not implemented appropriately. Indeed, one UK-based trade unionist indicated that digitalization, automation, and algorithmic management, when “used in combination… are toxic and are designed to strip millions of folks of basic rights.”⁵ Potential OSH issues may also include psychosocial risk factors if people are driven to work at a cobot’s pace (rather than the cobot working at a person’s pace); and collisions between a cobot and a person.⁶ Another cobot-related case of machine/human interaction creating new working conditions and OSH risks is where one person is assigned to “look after” one machine and is sent notifications and status updates about machines on personal devices like a smartphone or a home laptop. This can lead to risks of overwork, where workers feel responsible for taking note of notifications outside working hours and their work/life balance is disrupted.⁷

One expert⁸ in AI and work discussed developments around the Internet of Things (IoT) in workplaces, where machine-to-machine connected systems work alongside human labor in factories and warehouses. Data-input problems, inaccuracies, and faults with machine-to-machine systems create significant OSH risks as well as liability questions. Indeed, sensors, software, and connectivity can be faulty and unstable, and all vulnerabilities introduce questions about who is legally responsible for any damage that emerges. If a cobot runs into a worker, is it the fault of the cobot, the worker, the company that manufactured the cobot originally, or the company that is employing the worker and integrating the cobot? The complexities abound.

Human-robot interaction creates both OSH risks and benefits in the physical, cognitive, and social realm, but cobots may someday have the competences to reason, and must make humans feel safe. To achieve this, cobots must demonstrate perception of objects versus humans, and the ability to predict collisions, adapt behavior appropriately, and demonstrate sufficient memory to facilitate machine learning and decision-making autonomy (TNO, 2018, p. 16) along the lines of the previously explained definitions of AI.

3. Wearable Technologies

Wearable self-tracking devices are increasingly seen in workplaces. The market for industrial and health-care wearables has been predicted to grow from USD 21 million in 2013 to USD 9.2 billion by 2020 (Nield, 2014). From 2014 to 2019, an additional thirteen million fitness devices were predicted to become incorporated into workplaces. This is already happening in warehouses and factories where GPS, RFID, and now haptic sensing armbands, such as the one patented by Amazon in 2018, have entirely replaced the use of clipboards and pencils.

One new feature of automation and Industrie 4.0 processes where AI-enhanced automation is underway is in the area of lot size manufacturing.⁹ This process involves cases in which workers are provided with glasses with screens and virtual reality functionality, like HoloLens and Google Glass, or computer tablets on stands within the production line, which are used to carry out on-the-spot tasks. The assembly line model, where a worker carries out one repeated, specific task for several hours at a time, has not disappeared completely, but the lot size method is different. Used in agile manufacturing strategies, this method involves smaller orders made within specific time parameters, rather than constant bulk production that does not involve guaranteed customers.

Workers are provided with visual on-the-spot training enabled by a HoloLens screen or tablet and carry out a new task which is learned instantly and only carried out for the period of time required to manufacture the specific order a factory receives. While, at first glance, these assistance systems may appear to provide increased autonomy, personal responsibility, and self-development, that is not necessarily the case (Butollo, Jürgens, and Krzywdzinski, 2018).

The use of on-the-spot training devices, worn or otherwise, means that workers need less preexisting knowledge or training because they carry out the work case by case. The risk of work intensification thus arises, as head-mounted displays or tablet computers become akin to live instructors for unskilled workers. Furthermore, workers do not learn long-term skills because they are required to perform on-the-spot, modular activities in custom-assembly processes, needed to build tailor-made items at various scales. While this is good for the company’s efficiency in production, lot size methods have led to significant OSH risks in that they de-skill workers; skilled labor is only needed to design the on-the-spot training programs used by workers who no longer need to specialize themselves.

OSH risks

OSH risks can further emerge because of the lack of communications, where workers are not able to comprehend the complexity of the new technology quickly enough and particularly if they are also not trained to prepare for any arising hazards. One real issue is in the area of small businesses and start-ups, which are quite experimental in the use of new technologies and often overlook ensuring that safety standards are carried out before accidents occur, when it is, of course, too late.¹⁰ An interview with those involved in the IG Metall Better Work 2020 project (Bezirksleitung Nordrhein-Westfalen/NRW Projekt Arbeit 2020) revealed that trade unionists are actively speaking to companies about the ways they are introducing Industrie 4.0 technologies into workplaces (Moore, 2018b). The introduction of robots and worker monitoring, cloud computing, machine-to-machine communications, and other systems, have all prompted those running the IG Metall project to ask companies:

- What impact will technological changes have on people’s workloads?

- Is work going to be easier or harder?

- Will work become more or less stressful?

- Will there be more or less work?

The IG Metall trade unionists indicated that workers’ stress levels tended to rise when technologies are implemented without enough training or worker dialog. Expertise is often needed to mitigate risks of dangerous circumstances that new technologies in workplaces introduce.

4. Gig Work

Next, we turn to another arena in which AI is making an impact, the “gig work” environments.

“Gig work” is obtained by using online applications (apps), also called platforms, made available by companies such as Uber, Upwork, or Amazon Mechanical Turk (AMT). The work can be performed online (obtained and carried out on computers in homes, libraries, and cafes, for example, and including translation and design work) or offline (obtained online but carried out offline, such as taxi driving or cleaning work). Not all algorithms utilize AI, but client-worker matching services and customer assessments of platform workers produce data that train worker profiles. These profiles result in overall higher or lower scores, which then lead clients, for example, to select specific people for work over others.
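The way customer assessments feed into scores that shape who is selected for work can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of how any named platform operates: the worker identifiers, the ratings, and the use of a plain average are all hypothetical.

```python
from statistics import mean

def rank_workers(ratings_by_worker):
    """Order workers from highest to lowest average customer rating.

    `ratings_by_worker` maps a worker identifier to a list of past ratings.
    Workers with no ratings are left out, which already shows how newcomers
    can be disadvantaged by a purely score-based ranking.
    """
    scores = {
        worker: mean(ratings)
        for worker, ratings in ratings_by_worker.items()
        if ratings
    }
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical ratings on a 1-5 scale.
ratings = {
    "worker_a": [5, 4, 5],
    "worker_b": [3, 4, 2],
    "worker_c": [5, 5, 4, 5],
    "worker_d": [],  # new worker, no ratings yet
}
print(rank_workers(ratings))
```

A client shown only the top of such a list would rarely see lower-ranked or unrated workers, so a handful of early assessments can have a lasting effect on access to work.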

Monitoring and tracking have been daily experiences for couriers and taxi drivers for many years, but the rise in offline gig workers carrying out platform-directed food delivery by bicycle, order delivery, and taxi services is relatively new. Uber and Deliveroo require workers to install a specific application onto their phones, which perch on vehicle dashboards or handlebars, and workers gain clients through the use of satellite mapping technologies and algorithmically operated matching software. One benefit of using AI in gig work could be driver and passenger protection. DiDi, a Chinese ride-hailing service, uses AI facial-recognition software to identify workers as they log on to the application. DiDi uses this information to verify the identities of drivers, which is seen as a method of crime prevention. However, there was a very serious recent failure in the use of the technology in which a driver logged in as his father one evening. Under the false identity, later in his shift, the driver killed a passenger.

Delivery gig workers are held accountable for their speed, number of deliveries per hour, and customer rankings in an intensified environment that has been proven to create OSH risks. In Harper’s Magazine a driver explains how new digitalized tools work as a “mental whip,” noting that “people get intimidated and work faster” (The Week, 2015). Drivers and riders are at risk of deactivation from the app if their customer rankings are not high enough or they do not meet other requirements. This results in OSH risks including blatant unfair treatment, stress, and even fear.

Algorithms are used to match clients with workers in online gig work (also called microwork). One platform called BoonTech uses IBM Watson AI Personality Insights to match clients and online gig workers, such as those gaining contracts using AMT and Upwork. Issues of discrimination have emerged in relation to the domestic responsibilities, such as reproductive and caring activities in a traditional context, that women carry when doing online gig work at home. A recent survey of online gig workers in the developing world conducted by ILO researchers shows that a higher percentage of women than men tend to “prefer to work at home” (Rani and Furrer, 2017, p. 14). Rani and Furrer’s research shows that 32% of female workers in African countries, and 42% in Latin America, have small children. This results in a double burden for women, who “spend about 25.8 hours working on platforms in a week, 20 hours of which is paid work and 5.8 hours considered unpaid work” (ibid., p. 13). The survey shows that 51% of women gig workers work during the night (22.00 to 05.00) and 76% work in the evening (from 18.00 to 22.00), which are “unsocial working hours” according to the ILO’s risk categories for potential work-related violence and harassment (ILO, 2016, p. 40). Rani and Furrer further state that the global outsourcing of work through platforms has effectively led to the development of a “twenty-four hour economy … eroding the fixed boundaries between home and work … [which further] puts a double burden on women, since responsibilities at home are unevenly distributed between sexes” (2017, p. 13). The home could already be a risky working environment for women, who may be subject to domestic violence and who lack the legal protection provided in office-based work. Indeed, “violence and harassment can occur … via technology that blurs the lines between workplaces, ‘domestic’ places and public spaces” (ILO, 2017, p. 97).

OSH risks

Digitalizing nonstandard work such as home-based online gig work, and taxi and delivery services in offline gig work, is a method of workplace governance that is based on quantification of tasks at a minutely granular level, where only explicit contact time is paid. Digitalization may appear to formalize a labor market in the ILO sense, but the risk of underemployment and underpay is very real. In terms of working time, preparatory work for reputation improvement and necessary skills development in online gig work is unpaid. Surveillance is normalized but stress still results. D’Cruz and Noronha (2016) present a case study of online gig workers in India, in which “humans-as-a-service” (as articulated by Jeff Bezos; see Prassl, 2018) is critiqued for being the kind of work that dehumanizes and devalues work, facilitates casualization of workers, and even informalizes the economy. Online gig work, such as work obtained and delivered using AMT, relies on nonstandard forms of employment, which increase the possibilities for child labor, forced labor, and discrimination. There is evidence of racism, whereby clients are reported to post abusive and offensive comments on the platforms. Inter-worker racist behavior is also evident: gig workers working in more advanced economies blame Indian counterparts for undercutting prices. Further, some of the work obtained on online platforms is highly unpleasant, such as the work carried out by content moderators who sift through large sets of images and are required to eliminate offensive or disturbing images, with very little relief or protection around this. There are clear risks of OSH violations in the areas of heightened psychosocial violence and stress, discrimination, racism, bullying, and unfree and underage labor, because of the lack of basic protection in these working environments.

In gig work, workers have been forced to register as self-employed, losing out on the basic rights that formal workers enjoy, such as guaranteed hours, sick and holiday pay, and the right to join a union. Gig workers’ online reputations are very important because a good reputation is the way to gain more work. As mentioned above, digitalized customer and client ratings and reviews are key to developing a good reputation, and these ratings determine how much work gig workers obtain. Algorithms learn from customer rankings and the quantity of tasks accepted, which produces specific types of profiles for workers that are usually publicly available. Customer rankings are deaf and blind to workers’ physical health, care and domestic work responsibilities, and circumstances outside workers’ control that might affect their performance. This leads to further OSH risks, where people feel forced to accept more work than is healthy or are at risk of work exclusion. Customer satisfaction rankings, and the number of jobs accepted, can be used to “deactivate” taxi drivers’ use of the platform, as is done by Uber, despite the paradox and fiction that algorithms are free of “human bias” (Frey and Osborne, 2013, p. 18).
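The point that rankings are “deaf and blind” to context can be made concrete with a minimal sketch. Everything in it is an assumption introduced for illustration: the `WorkerProfile` fields, the `should_deactivate` rule, and both thresholds are hypothetical and do not describe how Uber or any other platform actually decides.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical thresholds, chosen only for illustration; the text does not
# state any platform's actual values.
MIN_AVG_RATING = 4.6
MIN_ACCEPTANCE_RATE = 0.8


@dataclass
class WorkerProfile:
    name: str
    ratings: list[float]  # customer ratings on a 1-5 scale
    offered: int          # jobs offered by the platform
    accepted: int         # jobs the worker accepted

    @property
    def avg_rating(self) -> float:
        return mean(self.ratings)

    @property
    def acceptance_rate(self) -> float:
        return self.accepted / self.offered


def should_deactivate(profile: WorkerProfile) -> bool:
    """Flag a worker using only the two numbers the profile records."""
    return (
        profile.avg_rating < MIN_AVG_RATING
        or profile.acceptance_rate < MIN_ACCEPTANCE_RATE
    )


steady = WorkerProfile("A", [4.8, 4.9, 4.7, 5.0], offered=100, accepted=92)
# Worker B declined jobs during an illness; the profile has no field for that.
unwell = WorkerProfile("B", [4.8, 4.9, 4.7, 5.0], offered=100, accepted=61)

for worker in (steady, unwell):
    print(worker.name, worker.acceptance_rate, should_deactivate(worker))
```

Both workers receive identical customer ratings, yet the second is flagged because the rule can only see the acceptance count. The reason for the drop never enters the data, so it cannot enter the decision.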

Overall, while there are benefits from integrating AI into gig work, including driver identity protection and flexible hours of work, which are good for people’s life and work choices, these same benefits can result in rising risks, as in the case of the DiDi driver and the double burden of work for women online workers. OSH protections are generally scarce in these working environments and the risks are many (Huws, 2015; Degryse, 2016), involving low pay and long hours (Berg, 2016), endemic lack of training (CIPD, 2017), and a high level of insecurity (Taylor, 2017). Jimenez (2016) warns that labor and OSH laws have not adapted to the emergence of digitalized work, and other studies are beginning to make similar claims (Degryse, 2016). The successes of AI are also its failures.

5. Toward a Conclusion

The difference between AI and other forms of technological development and invention for workplace usage is that, because of the intelligence projected onto autonomous machines, they are increasingly treated as decision-makers and management tools themselves, thanks to their seemingly superior capacity to calculate and measure. Where many recent reports on AI try to deal with the questions of “what can be done” or “how can AI be implemented ethically,” the issue is greater. A move to a reliance on machine calculation for intelligent workplace decision-making actually introduces extensive problems for any discussion of “ethics” in AI implementation and use.

In “An Essay Concerning Human Understanding,” the empiricist philosopher John Locke wrote that ethics can be defined as “the seeking out [of] those Rules, and Measures of humane Actions, which lead to Happiness, and the Means to practice them” (Essay, IV.xxi.3, 1824, p. 1689). This is, of course, just one quote, by one ethics philosopher, but it is worth noting that the seeking out and setting of such rules, which are the parameters for depicting ethics, has so far been carried out only by humans. When we introduce the machine as an agent for rule setting, as AI does, the entire concept of ethics falls under scrutiny. Rather than talking about how to implement AI without the risk of death, business collapse, or legal battles, which are effectively the underlying concerns that drive ethics in AI discussions today, it would make sense to rewind the discussions and focus on the question: why implement AI at all? Will the introduction of AI into various institutions and workplaces across society really lead to prosperous, thriving societies as is being touted? Or will it deplete material conditions for workers and promote a kind of intelligence that is not oriented toward, for example, a thriving welfare state, good working conditions, or qualitative experiences of work and life?

While machines have more memory and processing power than ever before, which is how they can participate in machine learning, they lack empathy and full historical knowledge or cultural context within which work happens. Machines cannot intentionally discriminate, but if workplace decisions have been discriminatory (that is, more men or white people have been hired over time than others; more women or people of color have been fired and not promoted than others, and so on), then the data that is collected about hiring practices will itself be discriminatory. The paradox is that if this data is used to train algorithms to make further hiring and firing decisions, then, obviously, the decisions will show discrimination. Machines, regardless of what forms of intelligence management attributes to them, do not, and cannot, see the qualitative aspects of life, nor the surrounding context. Cathy O’Neil, author of Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, made an insightful observation in a recent interview with the current author. While watching Deliveroo riders hurtle past her in the rain, Dr. O’Neil considered the platforms directing the riders’ work, which operate on the basis of efficiency and speed and thus push riders to cycle in unsafe weather conditions. This clearly puts riders’ very lives at risk. Dr. O’Neil calls algorithms “toy models of the universe,” because these seemingly all-knowing entities actually only know what we tell them, and thus they have major blind spots.
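The paradox of discriminatory training data can be illustrated with a deliberately crude sketch. The records below are synthetic and invented, and the “model” is a simple per-group frequency estimate standing in for machine learning in general; the names `fit_hire_rates` and `recommend` and the 0.5 threshold are assumptions for illustration, not a description of any real hiring system.

```python
from collections import defaultdict

# Synthetic, invented records: (group, qualified, hired). Every candidate is
# qualified, but group "B" was hired less often in this made-up history.
history = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 40 + [("B", True, False)] * 60
)


def fit_hire_rates(records):
    """'Train' by estimating the past hire rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, _qualified, was_hired in records:
        total[group] += 1
        hired[group] += was_hired  # True counts as 1, False as 0
    return {group: hired[group] / total[group] for group in total}


def recommend(model, group, threshold=0.5):
    """Recommend hiring when the learned rate for the group meets the threshold."""
    return model[group] >= threshold


model = fit_hire_rates(history)
print(model)
print(recommend(model, "A"), recommend(model, "B"))
```

Nothing in the code refers to prejudice, and qualification is identical across both groups. The learned rule still favors group A, because the only pattern available in the data is the outcome of past decisions.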

If it is accepted that machines hold the same competences as humans, or even better competences than us, will we begin to reduce management accountability? Further questions follow: can there be an ethical use for AI, given the complexity of rulemaking, when something besides an intelligent human mind is expected to make rules? Where will the final say in intelligence lie? Why do we want machines to behave as we do, given that evidence already shows that machine learning can only learn as much as already exists in the data that trains it, and if the data reflects humans’ discriminatory behavior, then the algorithms, almost necessarily, will demonstrate or promote discrimination? The mythical invention of E. M. Forster’s all-encompassing machine in his classic science fiction story (1928/2011) was not, of course, subject to a range of ethical and moral review panels before all of humanity began to live within it under the Earth’s crust. As we enter a new era of AI, it will remain important to recall the tension points in positioning technologies into places of power in workplaces and, rather than accept the looming horizon where machines are in command, to maintain a “human in command” (De Stefano, 2018) approach to rolling out any new technologies into workplaces. Human responses to this trend should involve careful regulation, in which human intelligence takes precedence, as the machine becomes increasingly evident in our working lives.

Some text presented here is adapted from P. V. Moore, “OSH and the Future of Work: Benefits & Risks of Artificial Intelligence Tools in Workplaces,” for EU-OSHA (2019).

Bibliography

—Agarwal, A., Gans, J., and Goldfarb, A. 2018. Prediction Machines: The Simple Economics of Artificial Intelligence. Boston, MA: Harvard Business Review Press.

—Berg, J. 2016. Income Security in the On-Demand Economy: Findings and Policy Lessons from a Survey of Crowdworkers. Conditions of Work and Employment Series No. 74. Geneva: International Labour Organization.

—Butollo, F., Jürgens, U., and Krzywdzinski, M. 2018. “From Lean Production to Industrie 4.0: More Autonomy for Employees?” Wissenschaftszentrum Berlin für Sozialforschung (WZB), Discussion Paper SP 111 2018–303.

—CIPD (Chartered Institute for Personnel Development). 2017. To Gig or not to Gig? Stories from the Modern Economy. Available at www.cipd.co.uk/knowledge/work/trends/gig-economy-report.

—Collins, L., Fineman, D. R., and Tsuchida, A. 2017. “People Analytics: Recalculating the Route.” Deloitte Insights. Available at https://www2.deloitte.com/insights/us/en/focus/human-capital-trends/2017/people-analytics-in-hr.html.

—D’Cruz, P., and Noronha, E. 2016. “Positives Outweighing Negatives: The Experiences of Indian Crowdsourced Workers.” Work Organisation, Labour & Globalisation 10(1): 44–63.

—De Stefano, V. 2018. “Negotiating the Algorithm: Automation, Artificial intelligence and Labour Protection.” ILO Working Paper no. 246/2018. Geneva: International Labour Organization.

—Degryse, C. 2016. Digitalisation of the Economy and Its Impact on Labour Markets. Brussels: European Trade Union Institute (ETUI).

—Desrosières, A. 2001. “How Real Are Statistics? Four Possible Attitudes.” Social Research 68(2): 339–355.

—EU-OSHA (European Agency for Safety and Health at Work). 2018. Foresight on New and Emerging Occupational Safety and Health Risks Associated with Digitalisation by 2025. Luxembourg: Publications Office of the European Union. Available at https://osha.europa.eu/en/tools-and-publications/publications/foresight-new-and-emerging-occupational-safety-and-health-risks/view.

—Feloni, R. 2017. “I tried the software that uses AI to scan job applicants for companies like Goldman Sachs and Unilever before meeting them, and it’s not as creepy as it sounds.” Business Insider UK, August 23, 2017. Available at https://www.businessinsider.in/i-tried-the-software-that-uses-ai-to-scan-job-applicants-for-companies-like-goldman-sachs-and-unilever-before-meeting-them-and-its-not-as-creepy-as-it-sounds/articleshow/60196231.cms.

—Forster, E. M. 1928/2011. The Machine Stops. London: Penguin Books.

—Frey, C., and Osborne, M. A. 2013. The Future of Employment: How Susceptible Are Jobs to Computerisation? Oxford: University of Oxford, Oxford Martin School. Available at https://www.oxfordmartin.ox.ac.uk/downloads/academic/The_Future_of_Employment.pdf.

—Hacking, I. 1986. “Making Up People.” In T. C. Heller, M. Sosna, and D. E. Wellbery (eds.), Reconstructing Individualism. Stanford, CA: Stanford University Press, 222–236.

—Houghton, E., and Green, M. 2018. “People Analytics: Driving Business Performance with People Data.” Chartered Institute for Personnel Development (CIPD). Available at https://www.cipd.co.uk/knowledge/strategy/analytics/people-data-driving-performance.

—Huws, U. 2015. “A Review on the Future of Work: Online Labour Exchanges, or ‘Crowdsourcing’—Implications for Occupational Safety and Health.” Discussion Paper. Bilbao: European Agency for Safety and Health at Work. Available at https://osha.europa.eu/en/tools-and-publications/publications/future-work-crowdsourcing/view.

—IBM. 2018. “IBM Talent Business Uses AI to Rethink the Modern Workforce.” IBM Newsroom. Available at https://newsroom.ibm.com/2018-11-28-IBM-Talent-Business-Uses-AI-To-Rethink-The-Modern-Workforce.

—ILO (International Labour Organization). 2016. Final Report: Meeting of Experts on Violence Against Women and Men in the World of Work. MEVWM/2016/7, Geneva: ILO. Available at http://www.ilo.org/gender/Informationresources/Publications/WCMS_546303/lang--en/index.htm.

—ILO (International Labour Organization). 2017. “Ending Violence and Harassment Against Women and Men in the World of Work, Report V.” International Labour Conference 107th Session, Geneva, 2018. Available at http://www.ilo.org/ilc/ILCSessions/107/reports/reports-to-the-conference/WCMS_553577/lang--en/index.htm.

—Jimenez, I. W. 2016. “Digitalisation and Its Impact on Psychosocial Risks Regulation.” Unpublished paper presented at the Fifth International Conference on “Precarious Work and Vulnerable Workers,” London, Middlesex University.

—Kaivo-oja, J. 2015. “A Review on the Future of Work: Robotics.” Discussion Paper. Bilbao: European Agency for Safety and Health at Work. Available at https://osha.europa.eu/en/tools-and-publications/seminars/focal-points-seminar-review-articles-future-work.

—Kar, S. 2018. “How AI Is Transforming HR: The Future of People Analytics.” Hyphen, January 4, 2018. Available at https://blog.gethyphen.com/blog/how-ai-is-transforming-hr-the-future-of-people-analytics.

—Locke, J. 1824. “An Essay Concerning Human Understanding.” Vol. 1, Part 1. The Works of John Locke, vol. 1. London: Rivington, 12th ed., 1689. Available at https://oll.libertyfund.org/titles/761.

—Miller, P. and O’Leary, T. 1987. “Accounting and the Construction of the Governable Person.” Accounting Organizations and Society 12(3): 235–265.

—Miller, P. and Power, M. 2013. “Accounting, Organizing, and Economizing: Connecting Accounting Research and Organization Theory.” The Academy of Management Annals 7(1): 557–605.

—Moore, P. V. 2018a. The Quantified Self in Precarity: Work, Technology and What Counts. Abingdon, UK: Routledge.

—Moore, P. V. 2018b. The Threat of Physical and Psychosocial Violence and Harassment in Digitalized Work. Geneva: International Labour Organization.

—Nield, D. 2014. “In Corporate Wellness Programs, Wearables Take a Step Forward.” Fortune, April 15, 2014. Available at http://fortune.com/2014/04/15/in-corporate-wellness-programs-wearables-take-a-step-forward/.

—Pasquale, F. 2015. The Black Box Society: The Secret Algorithms that Control Money and Information. Cambridge, MA: Harvard University Press.

—Porter, T. M. 1995. Trust in Numbers: The Pursuit of Objectivity in Science and Public Life. Princeton, NJ: Princeton University Press.

—Prassl, J. 2018. Humans as a Service: The Promise and Perils of Work in the Gig Economy. Oxford: Oxford University Press.

—PwC (PricewaterhouseCoopers). 2018. “Artificial Intelligence in HR: A No-Brainer.” Available at https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html.

—Rani, U., and Furrer, M. 2017. “Work and Income Security among Workers in On-Demand Digital Economy: Issues and Challenges in Developing Economies.” Paper presented at the Lausanne University workshop “Digitalization and the Reconfiguration of Labor Governance in the Global Economy.” November 24–25, 2017 (unpublished).

—Redden, C. 2019. Questioning Performance Management: Metrics, Organisations and Power. London: Sage Swifts.

—Taylor, M. 2017. Good Work: The Taylor Review of Modern Working Practices, London: Department for Business, Energy and Industrial Strategy. Available online at https://www.gov.uk/government/publications/good-work-the-taylor-review-of-modern-working-practices.

—The Week. 2015. “The Rise of Workplace Spying.” Available at http://theweek.com/articles/564263/rise-workplace-spying.

—TNO (Netherlands Organization for Applied Scientific Research). 2018. “Emergent Risks to Workplace Safety; Working in the Same Space as a Cobot.” Report for the Ministry of Social Affairs and Employment, The Hague.

—Woodcock, J. 2016. Working the Phones: Control and Resistance in Call Centres. London: Pluto Press.
