In a 2013 experiment, Eyal Aharoni and his team tried to predict how likely prisoners who were about to be released were to be back in prison within the next four years. To do so, they took a sample of 96 male prisoners and had them perform computer tasks that required quick decisions and thus triggered impulsive reactions. These were situations in which the subjects had no time to think about the consequences of their reactions.

During the experiment, the individuals’ brain activity was recorded via functional magnetic resonance imaging (fMRI), which measures changes in blood oxygenation as an indirect indicator of neural activity [1]. With this setup, the scientists wanted to find out whether there is a statistically significant relation between the activity of the brain’s anterior cingulate cortex (ACC), a region involved in impulse control, and the probability of a repeated legal violation.

The experiment found that subjects with relatively low ACC activity during the tasks had roughly twice the risk of violating the law within the next four years. [2] As earlier experiments had shown, the human brain develops depending on how it is used, especially at a young age. The structure of the ACC is therefore partly based on what we have learned; we are not born with it. [3] Furthermore, such brain tests do not automatically predict violations of laws and guidelines; in this case, the sample consisted of a population that had already learned such violations as a possible behavior. Accordingly, the individuals could learn other scripts. In a spontaneous situation, the subject would then not start the earlier script of violating the law, but instead the new, stronger one of resisting the temptation. Such a new script would have to include not deciding at once, but starting a decision-making process instead. That process takes into account the long-term consequences (returning to prison) and also builds empathy with the victims.

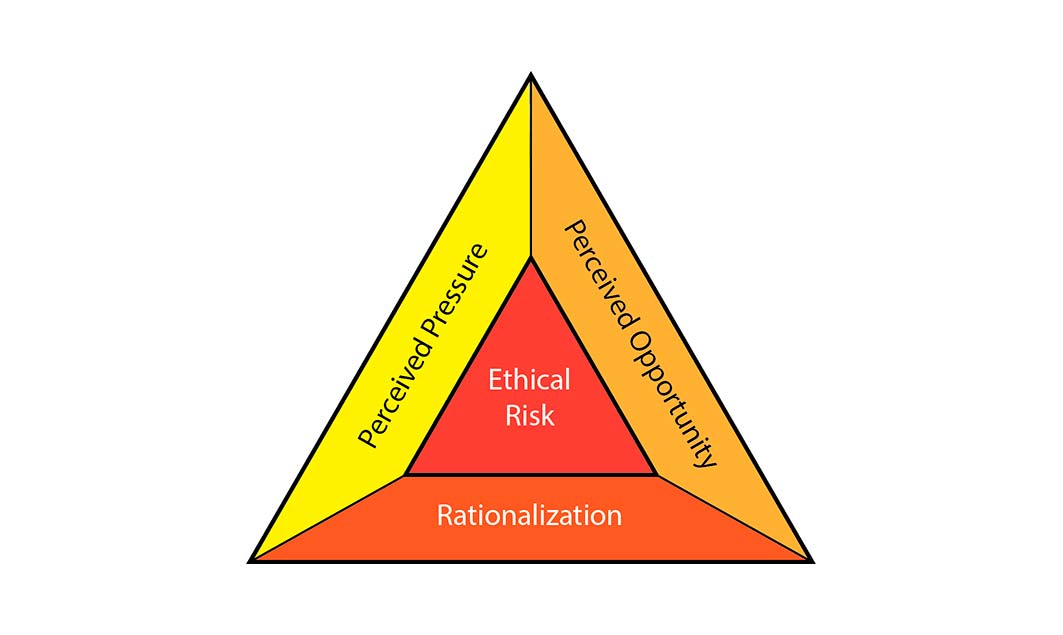

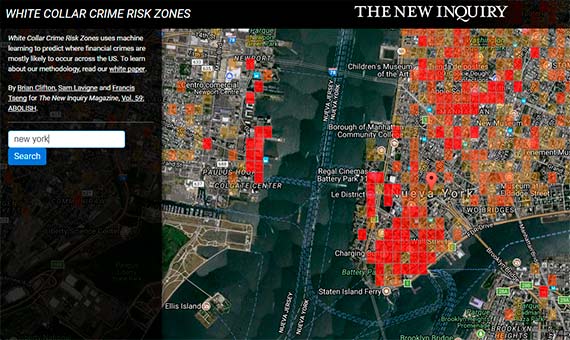

According to Donald Cressey’s Fraud Triangle [4], a negative environment may tempt individuals into improper behavior. In theory, if a high-risk person enters a high-risk territory, the overall risk of a legal violation rises. Today, various networks collect police information, such as crimes and their locations, to create real-time public safety maps.

Although prohibited by data privacy laws, it would technically be possible to collect ACC information during a company’s hiring tests. Based on such results, the company could decide whether the candidate is suitable for the job function (and its risk level), and the candidate could furthermore receive tailor-made training. Taking the next step, these results could be combined with a real-time risk map to predict the actual probability that the individual would break a guideline. As noted, this scenario is prohibited by current data privacy laws.

Modern data privacy laws, such as the EU General Data Protection Regulation, applicable since 2018, require a company to be transparent about the purposes for which collected information will be used. It is therefore not permitted to take information from the hiring process (including ACC test results) and use it later, at least not unless the individual has authorized the organization to do so. Technically, however, it would be possible to use this information to decide whether the employee is suitable for later job functions (and their risk levels) and to provide tailor-made training. Taking the next step, these results could be combined with a real-time risk map to predict the actual probability that the individual would break a guideline. As said before, this scenario is limited by local data protection laws.

Future technological possibilities make it imperative to start the discussion about the ethical consequences today. Do we want to avoid hiring an innocent person based on a brain scan, because it may predict a higher probability of inappropriate behavior in stressful situations, which would have significant negative consequences for the organization? Or do we believe in the human being and in the principle that everyone is innocent until proven guilty? All the more so as the violation is only predicted and not even committed. In fact, we do not even have to start the discussion, as the author Philip K. Dick already did this for us in his 1956 short story “The Minority Report”.

Find the original text published here.

Patrick Henz

References

[1] Brain Box (2015): “What does fMRI measure?”

[2] Aharoni, Eyal / Vincent, Gina M. / Harenski, Carla L. / Calhoun, Vince D. / Sinnott-Armstrong, Walter / Gazzaniga, Michael S. / Kiehl, Kent A. (2013): “Neuroprediction of future rearrest”

[3] Henz, Patrick (2017): “Access Granted – Tomorrow’s Business Ethics”

[4] Cressey, Donald (1973): “Other People’s Money: A Study in the Social Psychology of Embezzlement”

[5] Clifton, Brian / Lavigne, Sam / Tseng, Francis (2017): “Predicting Financial Crime: Augmenting the Predictive Policing Arsenal”
