Financial innovation—once an unquestioned positive for any economy—has been much less celebrated since the financial crisis that began in 2007 and the Great Recession that followed in its wake. Some leading economists, notably Paul Volcker (former Chairman of the Federal Reserve), Paul Krugman (Nobel Prize-winning economist at Princeton and a New York Times columnist) and Simon Johnson (former chief economist of the IMF), have each expressed skepticism about the social value of financial innovation in general, and with much justification, since some recent innovations helped lead to the crisis. More significantly, the sweeping Dodd-Frank Wall Street Reform and Consumer Protection Act, enacted in the United States in the summer of 2010 to prevent financial crises or at least to minimize their harmful impacts, contains numerous provisions that, depending on how future regulators implement them, could slow future financial innovation.

My thesis here is that financial innovation does not deserve all the blame that has been heaped on it. In fact, there have been a number of “good” innovations over the past several decades, though the critics are right that there have been “bad” ones as well. In this chapter I attempt to sort out the two, concentrating primarily on innovations introduced into the US market since the 1960s, the period in which Chairman Volcker, in particular, claims there has been little socially useful innovation. I conclude by offering some suggestions on how policy makers and regulators can best facilitate future “good” financial innovations while weeding out the “bad” ones before they can do much damage.

Financial vs. Real Sector Innovations: Are They Different?

Before I turn to the main topics of the chapter, however, it is important to address a threshold issue that lurks behind the critique of financial innovation: that it is somehow different from innovations in the real economy, and therefore deserving of more skepticism. Several such differences have been alleged. Do the allegations have some basis?

One common view, for example, is that financial innovation consists of little more than arbitrage, and that even successful trading strategies provide no net social value, since for every winner there is a loser. This view is misplaced. By definition, arbitrage eliminates differences in prices of like products or assets in different locations. Innovations that permit this to be accomplished more quickly and efficiently reduce costs, provide more accurate price signals at any point in time, and generally make markets more liquid and efficient. Added liquidity and efficiency, in turn, make it easier and less costly for firms to raise capital.

To be sure, there is something to the “zero-sum” critique of trading innovations. But as we will soon see, many useful financial innovations over the past several decades have little or nothing to do with trading.

A second, and very real, difference between innovations in finance and those in the real sector is that the former are often more heavily leveraged, or financed with debt. Among many other things, the recent financial crisis has illustrated how dangerous excessive leverage can be. For example, the infamous collateralized debt obligation (CDO), which enabled far too many subprime mortgages to be originated and sold (with the mistaken blessings of the credit rating agencies) and fueled the wider real estate bubble of the last decade, was a debt instrument that turned out to have largely destructive consequences. Likewise, the “structured investment vehicle,” or SIV, was one of the primary means by which large banks financed CDOs. I have more to say about both of these innovations below.

By contrast, real sector innovation tends to be financed more by equity than by debt. The “dot.coms” of the late 1990s, many of which disappeared rapidly when the stock market bubble popped in April 2000, got their start largely with equity provided by angel investors or venture capitalists, and later were financed through public stock sales. Although the stock market implosion caused significant losses to stockholders—and not just those with investments in the dot.coms—these losses for the most part were not compounded by leverage. As a result, the real sector fallout from the dot.com bust was far less severe than the damage caused by the popping of the real estate bubble.
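To make the leverage point concrete, the short Python sketch below splits a fall in asset values between owners and lenders. The function name `loss_split` and all the numbers are hypothetical illustrations, not data from the dot.com or housing episodes; the only assumption is the standard one that equity absorbs losses before debt does.

```python
def loss_split(asset_value: float, debt: float, price_drop: float) -> tuple[float, float]:
    """Split a fall in asset value between the owner (equity) and lenders (debt).

    Losses are absorbed by equity first; lenders lose only the part of the
    loss that exceeds the owner's equity cushion.
    """
    if not 0 <= debt < asset_value:
        raise ValueError("debt must be non-negative and below asset value")
    if not 0 <= price_drop <= 1:
        raise ValueError("price_drop must be between 0 and 1")
    equity = asset_value - debt
    loss = asset_value * price_drop
    owner_loss = min(loss, equity)
    lender_loss = loss - owner_loss
    return owner_loss, lender_loss


# Hypothetical all-equity financing (stylized dot.com case): owners absorb everything.
print(loss_split(100.0, 0.0, 0.20))   # owners lose 20, lenders lose 0
# Hypothetical 95% debt financing (stylized housing case): equity is wiped out first.
print(loss_split(100.0, 95.0, 0.20))  # owners lose 5, lenders lose 15
```

With no debt, a 20% price fall stays with the owners; with 95% debt financing, the same fall wipes out the owners' stake and passes three-quarters of the loss to lenders, which is the channel through which leverage carries losses into the financial system.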

The third alleged difference between financial and real sector innovations is that the former are often said to be driven largely by the desire to circumvent rules and statutes, while the latter are supposedly motivated overwhelmingly or even entirely by innovators seeking to do or make something “faster, cheaper, better.” This distinction is not as clear as some may believe. Financiers, manufacturers and service providers alike engage in games of “cat and mouse” with their regulators. Moreover, these games are not necessarily socially pernicious. To the contrary, efforts to get around “bad” or inefficient rules—such as the Depression-era limits on the interest that banks could pay their depositors—are to be applauded. Other efforts that get around “good” rules, such as capital standards (for example, the creation by banks of supposedly off-balance sheet SIVs), deserve condemnation.

My bottom line: when financial innovation leads to a better, faster or cheaper outcome, it is no different from real sector innovation. It is only when financial innovation is defined by or augmented by leverage that it can significantly differ from and be more dangerous than real sector innovation.

Why Does Financial Innovation Matter?

Financial institutions, markets and instruments perform four broad social and economic functions. Innovations that improve the way these functions are carried out, by definition, are useful. Others may temporarily appear to be improvements, but in fact turn out to have socially pernicious collateral effects. Before I give specific examples of both types, it is important to know what these functions are.

The first function of finance is to provide a means of payment and a store of wealth. What we know as “money” was first embodied in coins, livestock and foodstuffs, later in paper, and then in bank checking accounts. More recently, “money” has become digitized: it is found on general purpose credit cards (first introduced by American Express and Bank of America in 1958) and is transferred electronically in large wholesale amounts through automated clearinghouses, through the Fedwire system operated by the Federal Reserve (which also plays a central role in clearing checks written on commercial banks), and, among major banks in the United States and other developed economies, through the Clearing House Interbank Payments System (CHIPS).

Second, by offering methods for earning interest, dividends and capital gains on monies invested in them, financial institutions and instruments encourage saving. Saving is socially important because it funds investments in physical and human capital that in turn generate higher incomes in the future. By the 1960s, most of the major institutions that exist today for facilitating saving were already in place: banks (and savings and loans, or specialized banks that invested their deposits primarily in residential mortgages), insurance companies, mutual funds (but not yet money market funds or the increasingly popular index funds, each discussed below), and corporate pension plans. In addition, investors who had the time and the means could buy various financial instruments directly: government or corporate bonds and corporate stocks.

Third, financial institutions and markets, if they are working properly, channel savings, whether by domestic or foreign residents, into productive investments. Well before the 1960s, it was commonplace for companies wanting to build new facilities or to purchase new capital equipment to borrow the funds from banks or insurers (whose primary functions as “financial intermediaries” are to direct the funds placed with them into private and public sector investments), to issue new bonds, or even to sell additional stock. The US government was instrumental in facilitating investment in residential housing by aiding the mortgage market. In the 1930s, it established the Federal Housing Administration, to insure mortgages taken out by low- and moderate-income households, as well as the Federal Home Loan Bank System. Later and over time, the federal government launched Ginnie Mae, Fannie Mae and Freddie Mac to provide a secondary market for most mortgages. Likewise, the government facilitated investment in education, or “human capital,” by guaranteeing loans for post-secondary education. And, of course, the US government funded some of its own investment activities—such as the construction of the interstate highway system and the support of much scientific research—in part by issuing bonds (when tax revenues were not sufficient to pay for all this). But with the exception of a few small “venture capital” limited partnerships, to be discussed shortly, by the end of the 1960s the US financial system had yet to develop a reliable institutional way to fund the inherently risky process of firm formation and initial growth.

A final, and sometimes overlooked, essential function of finance is to allocate risks to those who are most willing and able to bear them. This function is sometimes confused with the belief that finance reduces overall risk. Clearly it does not, and cannot. Instead, the best that finance can do is to shift risk to those who most efficiently can bear it and to spread it out so that it is not unduly concentrated among a handful of parties. One of the most important lessons from the financial crisis is that the kinds of “securitization” that made subprime lending possible did not end up de-concentrating risks of default of the underlying mortgages, as so many market participants and other analysts (including me) argued or expected.

As of the 1960s, insurance companies dominated the risk-bearing/allocation function of finance by underwriting various personal (auto, home, life, and health) and commercial (primarily property-related) risks. But insurance for financial risks was limited. Insurers were willing to bear the risk of default on municipal and state bonds and certain other financial instruments, but generally no other financial risks. Futures contracts on various commodities had long traded on futures exchanges, but there were as yet no widely used mechanisms for insuring against other kinds of financial risks: those due to fluctuations in interest rates, currencies (there was no need for this, as exchange rates between currencies were fixed under the post-war Bretton Woods agreement), or stock prices (of individual companies or indexes). As will be discussed in the next section, various financial innovations over the past several decades have filled this gap.

Assessing Recent Financial Innovations

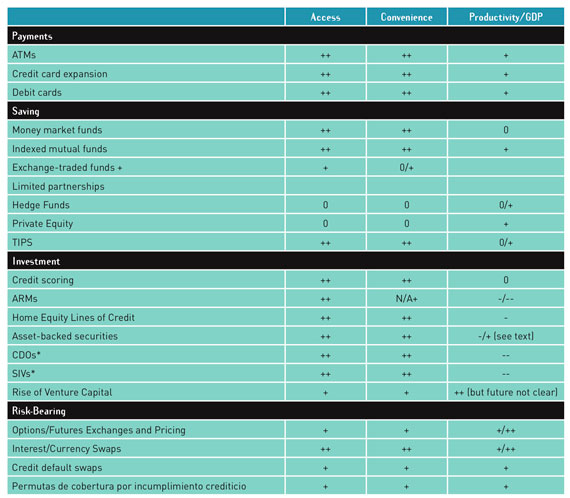

It is now time to list and assess specific financial innovations since the 1960s, or around the time that the ATM, the one financial innovation celebrated by Chairman Volcker, was introduced into the US banking system. I assess the innovations qualitatively along three dimensions: the degree to which they widen access to a particular financial service, enhance the convenience of users, and add to or detract from GDP or productivity. For each dimension, I give scores ranging from -- to ++ on what I believe to be the net impacts. Table 1 summarizes the results of my assessments, which I now describe verbally with respect to the innovations relating to each of the four financial functions just identified.

Table 1. Scoring Net Impacts of Recent Financial Innovations: A Summary

Source: Analysis in text.

Innovations in Payments

The ATM is not the only socially useful innovation in payments in recent decades. As noted, general purpose credit cards, joined later by debit cards, have become key parts of the payments systems in developed economies and, more recently, in some emerging markets (notably China). On balance, despite some continuing complaints about credit card disclosures and some pricing practices, these “plastic monies” have been welfare-enhancing. Credit and debit cards have become more convenient than cash and in some respects safer (in the United States, for example, a consumer's liability for unauthorized use of a lost or stolen credit card is capped at $50, whereas people can lose all the cash they carry if they are robbed). Credit cards also permit users to borrow, which has widened consumers’ access to unsecured credit at lower cost than was previously available, if at all, from pawn shops, loan sharks or other grey-market lenders. In the United States in particular, credit card borrowing has also enabled many entrepreneurs to launch their businesses at a scale beyond what their liquid net worth alone would permit. Perhaps more for this reason than any other, credit cards have made some positive contribution to long-run economic growth.

Savings Innovations

Up until the 1970s, American retail investors (and those outside the United States) had relatively limited choices about where to put their savings: bank deposits or their functional equivalents, bonds, stocks of individual public companies, and a limited range of mutual funds. A series of financial innovations since then, driven in part by interest rate deregulation and in part by the commercial application of academic insights, have greatly expanded the range of available options.

The new choices include: money market mutual funds (developed as a way of circumventing interest-rate controls on bank deposits, a clear example of a “bad” regulation), index mutual funds (originating from the academic insight that actively managed funds rarely outperform the indexes), exchange traded funds or “ETFs” (a cost-saving innovation developed by the financial industry), financial limited partnerships such as hedge funds and private equity funds (“alternative” assets that until relatively recently outperformed more liquid stock portfolios, though often because they are leveraged), and inflation-protected government bonds, known in the United States as Treasury Inflation-Protected Securities or “TIPS” (promoted by academic economists, first adopted in the United Kingdom in the 1980s and by the United States in 1997).

Broadly speaking, these savings vehicles have expanded access and convenience for investors, while most likely modestly enhancing economy-wide GDP. All of this occurred even while, in the United States, the private savings rate itself declined to roughly zero, most likely because households counted on the rising values of their homes to add to their wealth, a belief that had some validity until the real estate bubble popped in 2006-2007. Since the recession and the steep fall in both real estate and stock prices that followed, private savings rates have rebounded somewhat. Through mid-2010, when this chapter was written, the broader range of savings choices does not appear to have given much comfort to many newly risk-averse households, with the possible exception of TIPS and some commodity ETFs. These two instruments have come to be viewed as hedges against the inflation that may eventually follow the significant monetary easing engineered by central bankers in 2008-09 to keep developed economies from falling even deeper into the recession triggered by the financial crisis.
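For readers unfamiliar with how TIPS provide the inflation hedge just mentioned, here is a simplified Python sketch of the US design: the principal is scaled by the change in the consumer price index (CPI), the fixed real coupon is paid on that adjusted principal, and at maturity the holder receives the greater of the adjusted principal and the original par. The function names and the bond's terms are hypothetical, and the sketch ignores the lagged, interpolated reference CPI that the Treasury actually uses.

```python
def adjusted_principal(par: float, cpi_at_issue: float, cpi_now: float) -> float:
    """Principal scaled by the ratio of current CPI to CPI at issue (simplified)."""
    return par * cpi_now / cpi_at_issue


def semiannual_coupon(par: float, real_rate: float, cpi_at_issue: float, cpi_now: float) -> float:
    """The fixed real coupon rate is applied to the inflation-adjusted principal."""
    return adjusted_principal(par, cpi_at_issue, cpi_now) * real_rate / 2


def principal_at_maturity(par: float, cpi_at_issue: float, cpi_at_maturity: float) -> float:
    """At maturity the holder receives the larger of adjusted principal and par."""
    return max(par, adjusted_principal(par, cpi_at_issue, cpi_at_maturity))


# Hypothetical bond: $1,000 par, 1.5% real coupon; CPI rises 10% after issue.
print(adjusted_principal(1000, 200.0, 220.0))        # 1100.0
print(semiannual_coupon(1000, 0.015, 200.0, 220.0))  # coupon grows with the principal
# If prices instead fall 5% by maturity, the par floor applies.
print(principal_at_maturity(1000, 200.0, 190.0))     # 1000
```

Because both the coupon and the principal rise with the price level, the holder's real return is fixed in advance, which is what makes the instrument attractive to savers worried about future inflation.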

Intermediation-Related Innovations

Ask most economists which function of finance they believe to be most important, and the answer they will most likely give is the effective translation of savings into socially productive investments. Yet it is the innovations in financial “intermediation” that have had the most mixed record of all the innovations surveyed in this chapter, and largely for this reason, these innovations deserve more discussion than the others.

In retrospect, the mixed record of the intermediation-related innovations is due overwhelmingly to what turned out to be hugely costly innovations in housing finance in particular. The outcome was not an accident, however: it was heavily influenced, if not directly promoted, by US government policies that took the advancement of home ownership too far and by regulatory policies and attitudes that failed to police obviously unproductive innovations. Where, then, should the blame be assigned: to the unwelcome innovations or to the policies that facilitated, if not directly led to, them? The answer, of course, is both.

The general story of how all this happened is by now, of course, well known. Since the Depression, the US government has adopted a variety of measures to promote home ownership, including the creation of agencies to insure and purchase residential mortgages and to guarantee securities backed by them. In addition, the federal tax code has long permitted taxpayers to deduct the interest paid on home mortgages. In 1978, the federal government encouraged depository lenders to extend credit to lower-income households and to other borrowers in low-income neighborhoods.

For years, this combination of incentives and mandates steadily lifted the home ownership rate to roughly 64-65% of all households by the mid-1990s. Both the Clinton and Bush Administrations, as well as Congress, wanted that rate to go still higher, premised largely on the notion that home ownership yielded important externalities: homeowners tend to take greater care of their homes and have more interest in the welfare of their neighborhoods than renters. In addition, the Bush Administration in particular viewed home ownership as one, albeit very important, element of its larger interest in developing a broad “ownership society.” On this view, the greater the ownership interest people have in a broad array of assets—homes, companies, and the like—the greater will be their affinity for market-oriented policies.

Whatever the precise reason or mix of reasons, the strong bipartisan consensus favoring increased home ownership required that mortgage credit be made available on affordable terms to individuals and families with lower and less stable incomes than those who had earlier borrowed money to buy a home. This outcome, in turn, could only be realized if mortgage underwriting standards and down payments were relaxed for these “subprime borrowers.”

The financial industry responded with a series of innovations that were encouraged by federal policy but made possible only by a record bubble in housing prices, to which both the innovations and the policies that fostered them contributed. In combination, these innovations gave millions of Americans with less-than-stellar credit histories, many (but not all) with low incomes, access to mortgage credit, eventually pushing the home ownership rate to 69% of all households. This feat could not have been accomplished unless each and every one of the innovations about to be described had been developed and successfully marketed. Some of these innovations were innocuous or mildly constructive on their own, but in combination they proved to be deadly dangerous to the financial system and ultimately to the rest of the economy:

- To give subprime borrowers access to seemingly “affordable” mortgages, the mortgage lending industry (banks to a limited extent, but most importantly a new class of mortgage lenders that were not adequately supervised or regulated by federal authorities) invented a new variation of the adjustable rate mortgage that charged borrowers very low initial “teaser” interest rates. Several years later, these rates reset to levels substantially above the Treasury benchmark rate, at a point when, presumably, higher real estate prices would permit borrowers to refinance easily. This Ponzi-like scheme in fact worked until about 2006, when home prices quit rising, which triggered higher subprime mortgage defaults and eventually a full-blown financial crisis.

- Mortgage lenders would not have been comfortable extending subprime loans without the invention, and later propagation by both major commercial and investment banks, of a new financial instrument: the collateralized debt obligation (CDO). The CDO took what once was a socially productive innovation, a “mortgage-backed security” (MBS) backed by a pool of mortgages extended to prime-quality borrowers, and turned it into a financial Frankenstein. The CDO’s chief “innovations” included the use of newer securities backed by subprime (instead of prime) mortgages, increasingly underwritten without verification of the borrowers’ income or job history, and the slicing of the cash flows from those securities into different classes or “tranches” (as they came to be called) designed to appeal to investors with different appetites for risk. Those investors wanting the safest instruments got first rights to the cash flows from the mortgages, while investors in less safe but higher-yielding tranches had later claims. CDOs were central to the over-development of the subprime mortgage market because they allowed the originators of the mortgages to offload them (and thus not care about their quality) to the buyers of the different tranches of the securities.

- CDOs, and specifically their first and supposedly safest tranches, could not have been sold, however, without still other parties and innovations. Even with their first call on the cash flows, the first tranches of the CDOs would not have been attractive to risk-averse, yield-hungry investors (who were starved for safer, higher-yielding securities in the low-interest-rate environment engineered by the Federal Reserve to sustain the recovery) unless the ratings agencies bestowed their coveted AAA ratings on these particular securities (the lower ratings for the riskier tranches mattered less to investors with higher risk tolerances). When CDOs were first developed, the ratings agencies balked at doing this, which was the right instinct: the mortgages, after all, were subprime, and the agencies had no actuarial data on how such mortgages fared over an entire business cycle. But the agencies were ultimately persuaded when the banks and their allies made use of yet another recent innovation, the credit default swap (CDS). Although it has been much maligned in the popular press and in some journalistic accounts of the financial crisis, there is nothing inherently evil about the CDS itself: it is, after all, the functional equivalent of insurance, and it is precisely for this reason that the ratings agencies awarded their AAA ratings once packagers of CDOs added CDS protection, or even explicit bond insurance, to the first tranches. The CDS later became infamous, not because of a flawed design, but because one of its largest sellers, AIG, failed to provide sufficient collateral when it was due under the terms of its contracts. But not all CDO tranches (or even all CDOs) had protection from CDS or bond insurance, and investors in them lost heavily when subprime mortgages began defaulting after residential real estate prices quit climbing and started to plummet.
Ultimately, therefore, by facilitating the unbundling of mortgage origination from the risk of holding the mortgages to maturity, CDOs greatly weakened, if not destroyed, lenders’ incentives to underwrite mortgages prudently.

- One other dangerous financial innovation helped make the subprime mortgage debacle possible: the creation and greatly expanded use of the “structured investment vehicle” by some of the largest commercial banks active in creating and marketing CDOs and other risky asset-backed securities. SIVs were an entirely legal, ostensibly off-balance-sheet way for the banks to park their CDOs pending sale to the public without having to raise or hold the additional capital that the prevailing bank capital rules would otherwise have required. The SIVs had an Achilles heel, however: they were funded almost entirely (except for a small sliver of capital supplied by the banks and outside investors) by short-term commercial paper, which, although secured by the CDOs and other assets, proved highly susceptible to a creditor “run.” This is just what happened in mid-2007: when the market value of this collateral began to fall as home prices turned down, buyers of this “asset-backed commercial paper” refused to roll it over or to buy new issues. This triggered a wider run on many bank-sponsored SIVs, and after an aborted attempt by the Treasury to organize an industry-financed bailout of all major SIVs, the banks wound down their SIVs and took their assets, and more importantly their liabilities, back onto their balance sheets.
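The tranching described in the bullets above amounts to a priority rule for distributing cash. The Python sketch below implements a deliberately simplified sequential-pay waterfall, in which the most senior (first) tranche is paid in full before the next receives anything. The `Tranche` class, the `pay_waterfall` function and the 80/15/5 split are hypothetical; real CDOs also had coverage tests, separate interest and principal waterfalls, and other features not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Tranche:
    name: str
    promised: float  # amount owed to this tranche for the period


def pay_waterfall(cash: float, tranches: list[Tranche]) -> dict[str, float]:
    """Distribute cash strictly by seniority: the first tranche in the list is
    paid in full before the next one receives anything (sequential pay)."""
    payments: dict[str, float] = {}
    remaining = cash
    for tranche in tranches:
        paid = min(tranche.promised, remaining)
        payments[tranche.name] = paid
        remaining -= paid
    return payments


# Hypothetical structure: 80% senior, 15% mezzanine, 5% equity of a 100-unit pool.
deal = [Tranche("senior", 80.0), Tranche("mezzanine", 15.0), Tranche("equity", 5.0)]

print(pay_waterfall(100.0, deal))  # every tranche paid in full
print(pay_waterfall(85.0, deal))   # a 15-unit shortfall hits equity, then mezzanine
print(pay_waterfall(70.0, deal))   # a larger shortfall finally reaches the senior tranche
```

In this sketch the senior tranche is untouched until the shortfall exceeds the 20% of the pool sitting beneath it, which is why the first tranches were marketed as the safest. That protection, however, is only as good as the assumption that losses across the whole pool stay small.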

In sum, SIVs proved to be temporary, and ultimately flawed, vehicles for financing CDOs before they could be off-loaded to third-party investors. In the subprime episode they were joined by CDOs, teaser-rate ARMs, and other mortgage-financing innovations that for a time provided access to mortgage credit to a wider pool of homebuyers with sub-par credit histories. In combination, these innovations fueled the broader bubble in real estate prices, which eventually popped and brought the whole subprime mortgage experiment to an abrupt and highly painful halt.
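The payment shock built into the teaser-rate ARMs can be sketched with the standard level-payment (annuity) formula. The Python example below assumes, purely for illustration, a $200,000 30-year loan with a hypothetical 2% teaser rate for 24 months that then resets to 8% for the remaining term; actual subprime ARMs reset to an index plus a margin, which is simply an input here. The function names are hypothetical.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Level monthly payment that fully repays `principal` over `months`."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def balance_after(principal: float, annual_rate: float, months: int, paid: int) -> float:
    """Outstanding balance after `paid` level payments on the original schedule."""
    r = annual_rate / 12
    payment = monthly_payment(principal, annual_rate, months)
    balance = principal
    for _ in range(paid):
        balance = balance * (1 + r) - payment  # accrue a month's interest, then pay
    return balance


def teaser_payment_shock(
    principal: float,
    teaser_rate: float,
    reset_rate: float,
    term_months: int = 360,
    teaser_months: int = 24,
) -> tuple[float, float]:
    """Monthly payment during the teaser period and after the rate resets."""
    before = monthly_payment(principal, teaser_rate, term_months)
    remaining = balance_after(principal, teaser_rate, term_months, teaser_months)
    # The remaining balance is re-amortized at the higher rate over the remaining term.
    after = monthly_payment(remaining, reset_rate, term_months - teaser_months)
    return before, after


before, after = teaser_payment_shock(200_000, teaser_rate=0.02, reset_rate=0.08)
print(f"teaser payment: ${before:,.2f}; payment after reset: ${after:,.2f}")
```

Under these assumed rates the monthly payment roughly doubles at the reset, even though the loan balance has fallen only about 5%. A borrower who had counted on refinancing before the reset was exposed as soon as home prices stopped rising.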

Intermediation-related innovations, including some affecting mortgage lending, have not all been socially destructive, however, and thus this review would not be complete without mentioning the few that have made financial intermediation more efficient and improved social welfare during the past several decades.

A good place to start is with the securitization process, which began with mortgages in the early 1970s and later spread to other assets that served as collateral. The fundamental idea behind securitization—standardizing loans and using them to back securities to attract financing from the capital markets and not simply from banks—was and remains sound. A broader pool of financing, even without an implicit government guarantee, leads to modestly lower interest rates and thereby facilitates investment. But the subprime mortgage debacle revealed a significant downside of this new “originate-to-distribute” lending model. The separation of the origination of loans from those who ultimately hold them undermines incentives of the originators to be prudent. To some degree, securitization contracts address this problem by giving those who buy the loans rights to “put” them back to the originators under certain conditions. But these conditions are narrow and often contested. The better answer is for originators and securitizers to retain some credit risk—the Dodd-Frank bill requires 5% except for securities meeting high underwriting standards—so that both have stronger incentives for careful underwriting.
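The incentive effect of retaining credit risk can be shown with simple arithmetic. The Python sketch below compares two hypothetical ways an originator might hold a 5% stake in a securitized pool: a pro-rata ("vertical") slice of every tranche and a first-loss ("horizontal") slice at the bottom of the structure. Both forms, the function names and the pool figures are illustrative assumptions; the sketch does not model how regulators implementing Dodd-Frank define qualifying retention.

```python
def vertical_retention_loss(pool_loss: float, share: float = 0.05) -> float:
    """Originator holds `share` of every tranche, so it bears that share of each loss dollar."""
    return share * pool_loss


def horizontal_retention_loss(pool_face: float, pool_loss: float, share: float = 0.05) -> float:
    """Originator holds the most junior slice (share * face), which absorbs losses first."""
    return min(pool_loss, share * pool_face)


# Hypothetical $100 million pool that suffers $4 million of credit losses (figures in millions).
face, loss = 100.0, 4.0
print(vertical_retention_loss(loss))             # 0.2: originator bears 5% of the loss
print(horizontal_retention_loss(face, loss))     # 4.0: the first-loss slice absorbs it all
print(vertical_retention_loss(loss, share=0.0))  # 0.0: no retention, no stake in the loss
```

With no retention the originator bears none of the loss, which is the incentive problem of the originate-to-distribute model; either form of retention gives it a stake in the quality of the loans it makes, and a first-loss slice concentrates that stake the most.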

A second, related financial innovation is the development and now widespread use of credit scoring algorithms for personal and business borrowers. Credit scores have improved lenders’ ability to predict and thus to better price risk through the interest rates they charge on loans. Credit scoring has widened availability of credit and, by making credit ratings more objective, has reduced (though not entirely eliminated) racial discrimination by lenders.
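How a better default prediction feeds into loan pricing can be sketched with a break-even condition: the lender sets the rate so that expected repayment, including any recovery on defaulted loans, just covers its own funding cost. The Python example below assumes a credit score has already been translated into a one-year default probability (the mapping itself is not shown), and it ignores operating costs, capital charges and profit margins. The function name and all figures are hypothetical.

```python
def break_even_rate(default_prob: float, funding_rate: float, recovery: float = 0.0) -> float:
    """Loan rate at which expected repayment just covers the lender's funding cost.

    Solves (1 - p) * (1 + r) + p * recovery = 1 + funding_rate for r, per dollar lent.
    """
    if not 0 <= default_prob < 1:
        raise ValueError("default_prob must be in [0, 1)")
    return (1 + funding_rate - default_prob * recovery) / (1 - default_prob) - 1


# Hypothetical borrowers whose scores map to 1% and 8% one-year default probabilities,
# a 3% funding cost and an assumed 40% recovery on defaulted loans.
for p in (0.01, 0.08):
    print(f"default prob {p:.0%}: break-even rate {break_even_rate(p, 0.03, recovery=0.4):.2%}")
```

In this framing, riskier borrowers are charged more rather than being refused outright, which is one way more accurate prediction can widen access to credit.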

Finally, probably no financial innovation in the US over the past four decades has done more to improve the allocation of savings toward productive investment than the formalization and subsequent rise of the venture capital industry and, to a lesser extent, of angel investing (equity injections in startups by wealthy individuals or groups of them). Venture capital firms, limited partnerships managed by the venture capital general partner(s), have been rightly credited with having given birth to some of America’s most famous companies, including Google, eBay, Amazon and Genentech, among others. Over the past decade, especially since the bursting of the Internet stock bubble, venture capitalists have moved away from “seed” investing (providing the initial equity to help launch companies) toward less risky, later financing “rounds.” Not surprisingly, returns for limited partners in VC have plummeted. Accordingly, after its remarkable successes of the 1980s and 1990s, the VC industry is now at a crossroads. Not only do few VCs provide seed capital of any kind, but the industry is wary of financing capital-intensive startup firms, such as those attempting to develop new drug therapies or “clean energy” alternatives to carbon fuels. Seed financing arrangements are therefore a ripe area for future innovation.

Financial Innovations and Risk-Bearing

The fourth key function of finance is to spread or allocate risk to those parties most willing and able to bear it. In recent decades, a variety of derivatives—financial instruments whose value depends on some other “underlying” asset—have proliferated to take on this role: exchange-traded stock options and financial futures and “over-the-counter” swap arrangements (relating to exchanges of cash flows with different interest rates; in different currencies; and to possible loan defaults, or “credit default swaps”).

Derivatives have also become a media poster child for what supposedly went wrong in the financial crisis. Mostly, this is because one of the largest sellers of mortgage credit derivatives in particular, AIG, was brought to its knees in the fall of 2008 and was essentially taken over by the federal government (through a massive bailout orchestrated by the Federal Reserve) in an effort to contain systemic risk when that risk was at its peak. Mortgage-related credit default swaps also seemingly played the villain in Michael Lewis’s highly popular and well-researched book, The Big Short, which contributed to the popular ire over this particular financial innovation.

Several points should be made about financial derivatives to put them in their proper perspective. First, financial derivatives are hardly new: options (the right, but not the obligation, to buy or sell a product or instrument at a fixed price by a certain date) and futures (which require the holder to buy or sell the product or instrument at a fixed price at the maturity date) are several centuries old, and neither played any role in the recent financial crisis. Second, the vast majority of the hundreds of trillions of dollars (in notional or face value) of the newer financial derivatives, those whose value is tied to movements in interest rates and currencies, also played no role in the crisis. Furthermore, these derivatives have been socially constructive: both interest rate and currency swaps permit parties with different risk preferences to act on them without having to sell the underlying instruments (loans or bonds) to which the swaps refer.
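A minimal Python sketch of the point about swaps: a borrower with a floating-rate loan can enter a swap in which it pays a fixed rate and receives the floating rate, locking in its interest cost without refinancing the loan itself. The notional amount, the 4% fixed rate, the semiannual period and the function names are hypothetical, and the sketch assumes the swap's floating leg matches the loan's floating rate exactly.

```python
def swap_net_to_fixed_payer(
    notional: float, fixed_rate: float, floating_rate: float, year_fraction: float = 0.5
) -> float:
    """Net swap cash flow for one period to the party paying fixed and receiving
    floating (positive means it receives money, negative means it pays)."""
    return notional * (floating_rate - fixed_rate) * year_fraction


def hedged_borrowing_cost(
    notional: float, fixed_rate: float, floating_rate: float, year_fraction: float = 0.5
) -> float:
    """Interest on a floating-rate loan, less the net swap receipt, for one period."""
    loan_interest = notional * floating_rate * year_fraction
    return loan_interest - swap_net_to_fixed_payer(notional, fixed_rate, floating_rate, year_fraction)


# Hypothetical: a $10 million floating-rate loan swapped into a 4% fixed rate.
for floating in (0.01, 0.04, 0.09):
    cost = hedged_borrowing_cost(10_000_000, 0.04, floating)
    print(f"floating rate {floating:.0%}: hedged semiannual cost ${cost:,.0f}")
```

Whatever the floating rate turns out to be, the hedged semiannual cost stays at $200,000 (4% a year on $10 million), while the swap counterparty takes on the opposite exposure.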

Third, even the credit default swap, perhaps the most maligned of all financial derivatives, is fundamentally a constructive innovation. As a device to insure against loan default, the CDS affords a way for various parties—lenders, suppliers, customers, and others—to hedge against a very specific adverse event. Even when these swaps are bought by “speculators,” or those without an economic interest in the underlying debt, they serve a useful function, as long as those selling these instruments have posted adequate collateral or margin and have sufficient capital to honor the contracts (as AIG did not). For one thing, without speculators, as in options markets where buyers typically do not own the underlying security on which the option is based, hedgers would have much more difficulty finding counterparties. Perhaps just as important, because CDS markets are typically far more liquid than are the markets in the underlying loans or bonds, CDS prices—which reflect the views of both hedgers and speculators—provide more accurate, timely market-based signals about the financial health of the company (or other issuer) whose debt is subject to the swap, than the market prices of the debt itself.
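The insurance-like structure of a CDS can be sketched in a few lines of Python. The protection buyer pays a periodic premium (the spread) on a notional amount and, if the reference borrower defaults, receives the loss given default, taken here as notional times one minus an assumed recovery rate. Annual premiums, year-end defaults, no discounting, the 40% recovery rate and the function name are all simplifying assumptions; crucially, the sketch also assumes the seller pays, which is exactly what adequate collateral and capital are meant to guarantee.

```python
from typing import Optional


def cds_buyer_cash_flows(
    notional: float,
    annual_spread: float,
    years: int,
    default_year: Optional[int] = None,
    recovery_rate: float = 0.4,
) -> tuple[float, float]:
    """Return (total premiums paid, protection payment received) for a CDS buyer."""
    premiums = 0.0
    for year in range(1, years + 1):
        premiums += notional * annual_spread  # this year's premium
        if default_year == year:
            # Assumed: default occurs at year-end and the seller pays notional less recovery.
            return premiums, (1.0 - recovery_rate) * notional
    return premiums, 0.0


# Hypothetical contract: $10 million notional, 1% (100 basis point) annual spread, 5 years.
print(cds_buyer_cash_flows(10_000_000, 0.01, 5))                  # no default
print(cds_buyer_cash_flows(10_000_000, 0.01, 5, default_year=2))  # default in year 2
```

A hedger holding the underlying bonds uses the protection payment to offset its loss on them; a speculator receives the same payment without owning the bonds, which is why it matters so much whether the seller can actually pay.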

Fourth, the mortgage-related CDSs that have attracted so much attention and notoriety account for less than 5% of the overall CDS market. Virtually all other CDSs relate to corporate bonds or loans.

Still, over-the-counter (OTC) markets for financial derivatives are far from perfect. They are dominated by a handful of dealers, and their prices are neither fully transparent nor reported in a timely manner. As the AIG episode demonstrated, they are also subject to breakdown if one or more large participants cannot honor their obligations. These problems should be largely, if not entirely, addressed once all the regulations relating to OTC-traded financial derivatives in the Dodd-Frank financial reform bill are developed and implemented. In particular, the bill’s requirement that standardized OTC financial derivatives of all types be cleared by a central clearinghouse should remove the risk of cascading defaults. That risk arises when derivatives contracts are bilateral and the counterparties can look only to each other for performance, rather than to a central organization with the ability to set and enforce margin and collateral requirements. In addition, the bill requires such standardized contracts to be traded on quasi-exchanges (“swap execution facilities,” a term yet to be defined by regulators) and their prices to be reported more frequently than is currently the case. Better transparency, in turn, will make it easier to specify appropriate margin requirements. It will thus further reduce, beyond what central clearing achieves by itself, the potential for system-wide collapse if one or more key derivatives participants are unable to honor their obligations.

In sum, financial derivatives enhance the ability of both financial and non-financial parties in an economy to hedge and control their financial risks. That some parties may use these contracts to bet correctly on the failure of particular companies or entire markets—as was the case with subprime mortgages—does not disprove this central proposition. In all markets there are winners and losers, but the fact that there are both does not condemn markets as institutions. Financial derivatives are no exception.

However, recent events have underscored an important point about derivatives. They have become such important financial instruments, and some of the parties who trade them are so heavily interconnected with each other and with the rest of the financial system, that it is essential to have an appropriate infrastructure. That infrastructure must ensure that performance difficulties of one or more parties do not spill over and threaten the viability of the entire system. Recent legislative reforms in the United States, likely to be emulated in other countries, should substantially mitigate this risk.

Public Policy toward Financial Innovation in the Future

In the summer of 2010, the US Congress enacted, and the President signed into law, the Dodd-Frank bill, the most sweeping financial reform legislation since the Depression. This is not surprising. The financial crisis of 2007-2009 was also the most traumatic such event since the 1930s, and it would have been shocking had Congress done nothing in response.

Debate will surely continue for years over the merits of Dodd-Frank, both as it was enacted and, more importantly, as it will be implemented. Implementation will come through the more than 200 rulemakings that will eventually convert its generally ambiguous statutory language into more concrete regulatory guidance. As regulators go about their jobs, and as future legislators consider tweaks or possibly major changes to Dodd-Frank, their attitudes toward financial innovation will be critical.

For example, a skeptical view of financial innovation may take hold, either because the benefits of innovation are perceived to be presumptively small or because the risks of catastrophic damage are feared to be non-trivial (or both). In that case, policymakers (and even voters) are likely to demand some sort of preemptive screening, and possibly design mandates, before financial innovations are permitted to be sold in the marketplace. This attitude would put regulators rather than the market in charge of screening innovation, a process that runs significant risks of chilling innovations before they have even had the chance to be tested in the marketplace. Conversely, a more open, wait-and-see approach would allow innovations to emerge and then regulate them only if they generated costs greater than their benefits. This has been the prevailing approach to financial innovation so far, and it clearly is the way US policy has generally handled innovation in the real sector of the economy. A wait-and-see policy gives the market the first crack at screening innovations, but it runs the opposite risk from the preemptive approach. Regulators may be late to act, whether because of their own laxity or because of strong political counter-pressures. If they are, they can permit socially harmful innovations to wreak considerable havoc before those innovations are reined in.

In some areas of life, of course, it is appropriate for policymakers to take a skeptical approach toward innovation. The concern about possible catastrophic outcomes is the reason Congress established the Food and Drug Administration. Among other things, Congress required that new drugs be tested extensively in both animals and humans before they can be sold to consumers. Analogously, the dangers of a core meltdown of a nuclear reactor, however remote, have driven policymakers from the very beginning of the nuclear age to require the utilities that construct such facilities to comply with specific design and performance standards. The European Commission has gone further by adopting the “precautionary principle” in a number of arenas: environmental policy, food safety, and consumer protection generally. This principle has been applied differently in different contexts. In essence, it means that policymakers can regulate a new (or currently existing) product or activity in advance, or even ban it, where there are plausible grounds for believing that it poses a risk to human health or the environment.

With the narrow exceptions of pharmaceuticals and nuclear power, however, US regulatory and social policy has not followed the precautionary principle, and thus has so far taken a very different course from that in Europe. Government regulates only once evidence of detrimental side-effects becomes reasonably clear, and then, where the underlying statute permits, only when the benefits of regulating outweigh the costs, and the content of the regulation represents the least costly way to achieve those benefits.2

So, which model should apply to financial innovation in the future: the preemptive drug/nuclear power approach, or the wait-and-see policy generally pursued in most other contexts? Regulators in the United States and elsewhere will be wrestling with this question in many contexts for years to come. Despite the clear damage caused by this most recent crisis, I believe that, generally speaking, financial innovation should continue to be screened by the market first and regulators later, for at least two reasons.

First, unlike in the pharmaceutical industry, it is difficult, if not impossible, to conduct clinical trials in the financial sector. New drugs can be and are tested on sample populations, first for their safety and then for their efficacy. If they pass both tests, the FDA makes the reasonable assumption that the behavior of the drug among a representative sample may be extrapolated to the larger population. In contrast, one could in theory test a new financial product (say, a mortgage) on a sample population, but its behavior among that sample is likely to be heavily time-dependent. Subprime, adjustable-rate mortgages with low initial “teaser” interest rates that were extended in 2002 and 2003 may have been quite safe because real estate prices were then rising, enabling borrowers to refinance at a later point. But the very same mortgages extended in 2006 or 2007, just as real estate prices peaked, had a far worse delinquency record. In short, the results of a “clinical test” of a financial product at a particular point in time cannot be safely extrapolated to all time.

One possible response to this problem, of course, would be to test new financial products over an entire business cycle before letting them on the market. But this would subject such products to substantial delay and thus surely reduce the incentives of financial institutions to innovate.

Second, the danger that preemptive screening will chill productive innovation, even without the kind of regulatory delay just suggested, is another powerful reason to reject the preemptive approach to regulation in the financial arena generally. Experience in the real sector illustrates the point. Had US policymakers followed a precautionary or preemptive approach rather than a “wait-and-see” policy, it is conceivable that many of the innovations that make up modern life would have been introduced much later, or not at all. Examples include the automobile (with side effects of more than 40,000 auto-related deaths a year), the airplane (which has its share of fatalities, albeit far fewer than the car), and even the Internet (which is used by terrorists and criminals, not just ordinary citizens).

Advocates of preemptive screening in the financial arena no doubt will argue, possibly taking their cue from Paul Volcker’s skepticism toward the social value of financial innovation in general, that financial innovation should be treated differently from real sector innovation for regulatory purposes. Their reasoning is that the benefits of innovation are likely to be smaller in the financial arena than in the real sector, and the dangers of harmful innovation much greater. My foregoing summary of financial innovations provides a more optimistic assessment than this view does. Moreover, the case for preemption wrongly assumes, in my view, that “wait-and-see” regulation cannot be improved.

I believe it can, in part precisely because regulators made mistakes, which they have admitted, in the run-up to the most recent crisis. One of the silver linings of the crisis is that it has demonstrated to elected officials the dangers of interfering with regulatory attempts to clamp down earlier on products and practices that allow asset bubbles to expand unduly and later burst, with devastating consequences. At least for a good long while, regulators will have not only greater freedom to act but also the legal duty to do so to prevent future bubbles, especially those fueled by leverage, from getting out of hand. This applies in particular to the new Financial Stability Oversight Council of US regulators created by the Dodd-Frank bill.

Another lesson from the recent crisis is that potentially dangerous bubbles may be forming when particular asset classes or financial instruments are growing rapidly. Future regulators would be greatly aided in their efforts to identify and prevent bubbles, and thus possible sources of systemic crises, by harnessing important market-based signals of distress. Examples include the signals provided by the markets in credit default swaps, which regulators can use to justify their own early, preventive actions. Indeed, this potential use of CDSs is an important reason why attempts by regulators in some countries to ban or limit “naked” CDSs or short selling are seriously mistaken. They punish the messenger, when in fact we need more market-based messaging to help guide regulators and policymakers.

Finally, notwithstanding the strong general case for a wait-and-see approach to financial regulation, there may be certain areas of finance where a more intrusive, preemptive approach toward innovation is warranted. One such area is financial products involving long-term contracts entered into by consumers, such as mortgages (when borrowing) or annuities (for retirement). A growing literature in behavioral finance indicates that individuals are not always rational in their investment decisions. This tendency is dangerous when even well-informed individuals make long-term financial commitments with heavy penalties (in the case of mortgages) or perhaps no exit strategy (in the case of annuities) for changing their minds later. In these cases, preemptive approval of the design of the financial products themselves may be necessary to prevent many consumers from locking themselves into expensive or potentially dangerous financial commitments. But this exception should remain an exception and not become the rule.

In sum, a balanced look at financial innovation over recent decades reveals a more positive picture than the one painted by some skeptical observers. But regardless of how one assesses past financial innovation, the recent crisis teaches us that policymakers must stand ready to correct abuses promptly when they appear. They must not let destructive financial innovations wreak the kind of economic havoc that we have unfortunately just witnessed.

Notes

- Senior Fellow, Economic Studies Program, The Brookings Institution and Vice President, Research and Policy, The Kauffman Foundation. Excellent research assistance by Adriane Fresh is greatly appreciated. This chapter is based on an earlier, longer essay, “In Defense of Much, But Not All, Financial Innovation,” available on the website of the Brookings Institution, at www.brookings.edu.

- The balancing of benefits and costs and the least-cost requirement for regulation have been embodied in one fashion or another in Executive Orders since the Ford administration.
